A recent study by researchers at the Icahn School of Medicine at Mount Sinai in the United States found that even the most advanced language models, such as OpenAI's o3, struggle to move beyond stereotypical thinking when confronted with unconventional scenarios in medical contexts.

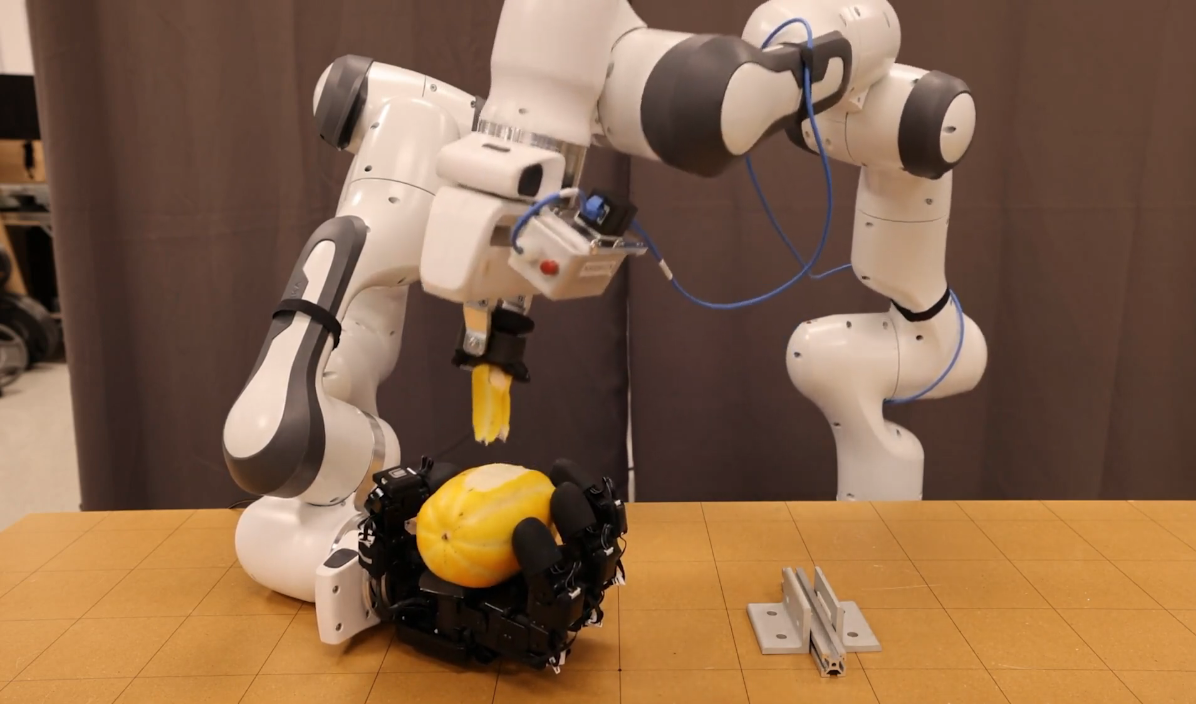

The research team started from a core observation: large language models (LLMs), like ChatGPT, tend to provide typical and common answers in standard medical cases. However, they often fail to notice or give due weight to subtle changes in a scenario, which the researchers attribute primarily to extensive training on repetitive data and familiar patterns.

The researchers likened the way these models operate to "System 1," or "fast thinking," as described by Daniel Kahneman in his book "Thinking, Fast and Slow." This mode of thinking relies on quick, intuitive responses based on past experience and suits everyday situations that do not require deep analysis. In contrast, "System 2," or "slow thinking," depends on deliberate analysis and careful consideration and is used for solving complex problems. The study showed that AI models often fail to shift to this analytical mode even when it is needed, which may explain their disregard for subtle changes in scenarios.

To illustrate this, the researchers tested commercially available language models on a combination of creative lateral thinking puzzles and well-known medical ethics dilemmas, each with slightly altered details.

A prominent example involved a modified version of the classic "Surgeon's Dilemma," a famous puzzle that exposes gender bias. In the original version, a boy and his father are injured in an accident. When the boy arrives at the hospital, the surgeon exclaims, "I can't operate... he's my son!" Many people are stumped because they do not consider that the surgeon could be the boy's mother. In the modified version, the researchers explicitly stated that the father was the surgeon and the mother was not. Despite this clear information, some AI models still gave the answer they had learned from the original puzzle, insisting that the surgeon was the boy's mother. This reveals the models' tendency to cling to familiar patterns, even when presented with new, contradictory information.

The study's findings were published in the journal npj Digital Medicine under the title "Pitfalls of large language models in medical ethics reasoning." The study demonstrated that AI tends to favor the quickest or most common answer, often overlooking precise and critical details. In healthcare, where decisions carry sensitive ethical and clinical implications, such oversights can lead to severe consequences.

Therefore, the study emphasized the critical importance of vigilant human oversight when using AI, especially in cases requiring ethical sensitivity, profound judgment, and empathetic communication skills. Despite the significant capabilities of these models, they are not infallible and should be regarded as a supplementary tool that enhances human expertise, not a replacement for it.

Such studies can help highlight the challenges stemming from repetitive training patterns, thereby stimulating the development of technical solutions to improve AI models' ability to make more accurate and responsible decisions in clinical contexts.