Researchers at UC Davis Health in California have developed a new brain-computer interface (BCI) that translates brain signals into spoken language with an unprecedented accuracy of up to 97%.

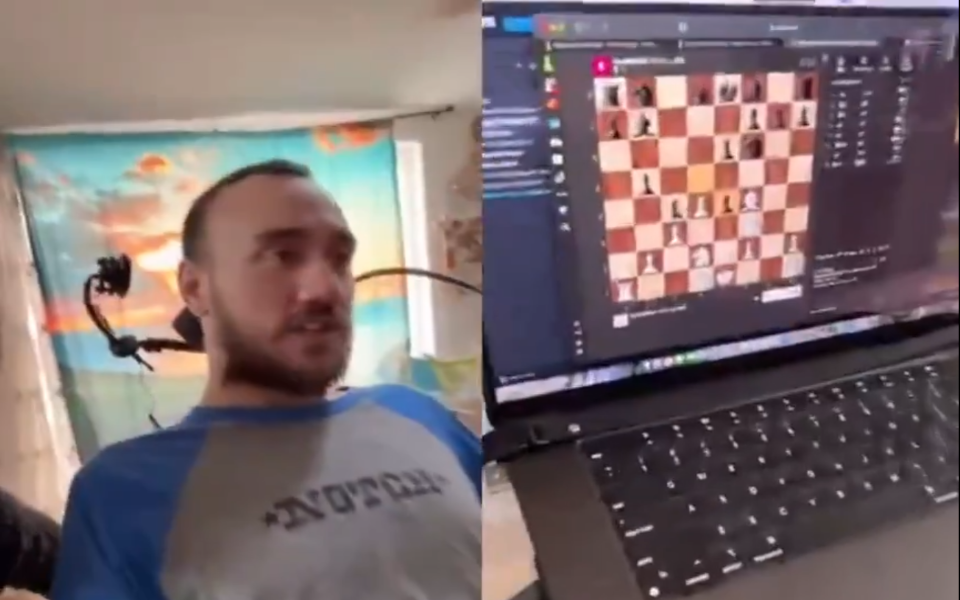

The BCI was implanted into the brain of Casey Harrell, a 45-year-old man living with amyotrophic lateral sclerosis (ALS), a neurodegenerative disease that has robbed him of the ability to control the muscles used for speech.

Detailed in the New England Journal of Medicine, the study involved implanting four microelectrode arrays, with 256 electrodes in total, in the region of the brain responsible for coordinating speech. The arrays allowed researchers to record neural activity associated with speech.

Advanced algorithms and machine learning models were designed to interpret these signals, which are essentially commands to the muscles involved in speech. These signals were then translated into corresponding words, and a separate program vocalized the text.

Increasing Accuracy Through Training

Machine learning models that interpret brain signals require vast amounts of data to perform effectively. Researchers collaborated with Harrell to train the system, starting with a vocabulary of just 50 words. Within 30 minutes, the system achieved 99.6% accuracy in recognizing these words.

When the vocabulary was expanded to 125,000 potential words, accuracy initially dropped to 90.2%. With continued data collection and training over 32 weeks, however, the system eventually stabilized at 97.5% accuracy.
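The article does not say how these accuracy figures are calculated. A common convention for speech decoders, assumed here, is word accuracy defined as one minus the word error rate: the number of word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the length of the intended sentence. The Python sketch below illustrates that convention only. The function names and the example sentences are hypothetical and are not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: this is the standard speech-recognition definition,
    not necessarily the exact metric used in the study.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Word accuracy expressed as 1 - WER (an assumed convention)."""
    return 1.0 - word_error_rate(reference, hypothesis)


if __name__ == "__main__":
    # Hypothetical example: one wrong word out of five.
    intended = "i want some water please"
    decoded = "i want sum water please"
    print(f"word accuracy: {word_accuracy(intended, decoded):.1%}")
```

Under this definition, 97.5% accuracy corresponds to roughly one wrong word in every 40, while 90.2% corresponds to roughly one in ten.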

Harrell was visibly moved when he first saw the words he was trying to say appear on a computer screen. The BCI, aided by artificial intelligence, had successfully decoded his attempted speech. Researchers then synthesized his voice from pre-recorded vocal samples so that the decoded words could be spoken aloud.