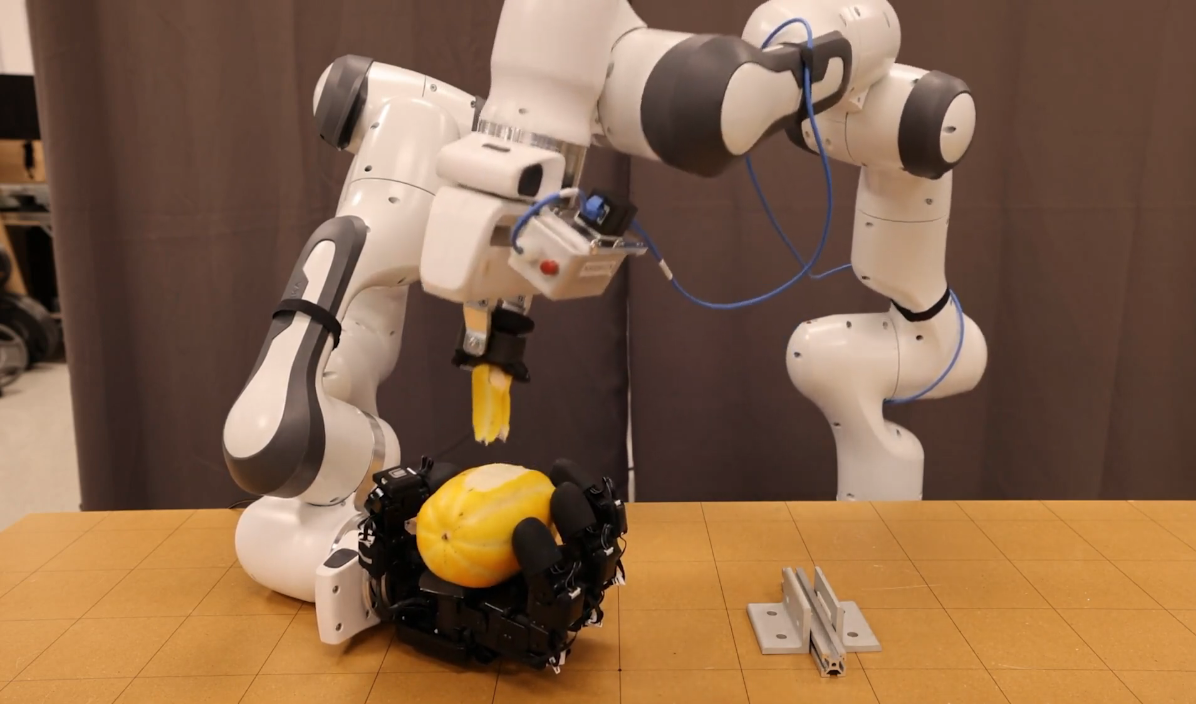

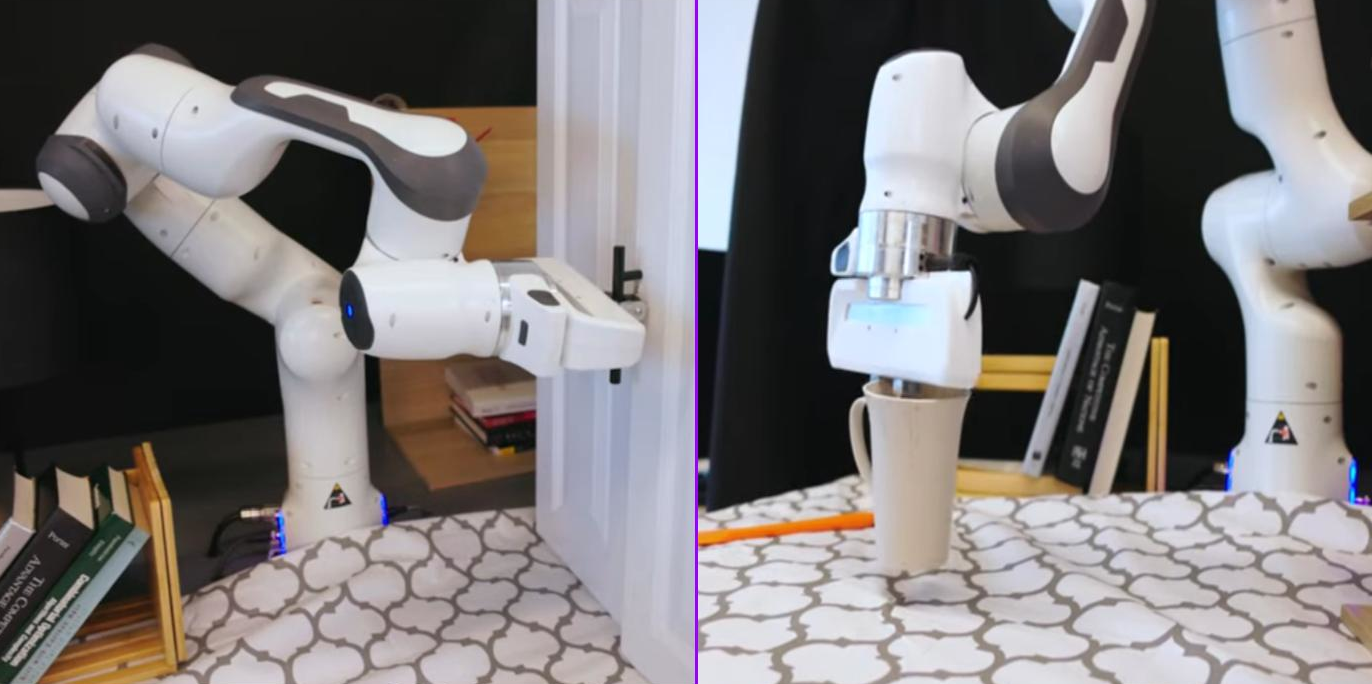

Sports competitions between robots and humans provide a valuable arena for assessing how far robot capabilities have come. These competitions don’t just evaluate whether a robot can perform a task, like folding a piece of clothing or making a cup of coffee; they also assess whether it can perform with efficiency, speed, and precision on par with, or even surpassing, human performance.

Table tennis tests a robot’s capabilities in several ways. It challenges the precision of its sensors in capturing the smallest details (like the ball’s position at every moment), as well as the ability of its intelligent systems to analyze this information, assess the opponent’s level, and determine the appropriate response in real time. It also tests the robot’s physical ability to move quickly enough to execute the chosen action at the right moment. A malfunction in any of these systems, or even a slight delay, can cost the robot the point and ultimately the game.

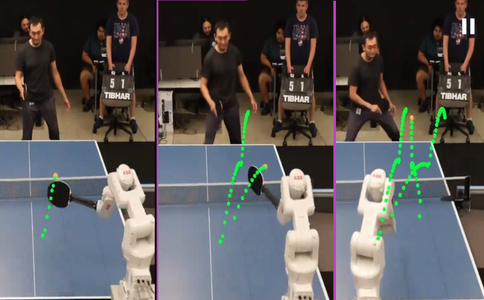

Google has published a research paper, along with videos, of a robot that plays table tennis at an intermediate level. The robot won 13 of its 29 matches (about 45%). It faced players of various skill levels: it beat every beginner (100% win rate), won 55% of its matches against intermediate players, and lost every match against advanced players (0% win rate).

The robot relies on a hierarchical policy built from a set of low-level skills, such as forehand topspin, backhand targeting, or forehand serve. A higher-level controller tracks the game state, the player’s level, and what has happened so far, then selects the skill the robot should execute. The selected skill may not be the ideal shot in the abstract, but it is the best choice for the robot given its motor capabilities and its success rate in executing that skill.
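To make the idea of skill selection concrete, here is a minimal sketch in Python. It is not the paper’s implementation: the `Skill` class, the `choose_skill` function, the success rates, and the opponent-specific `effectiveness` multipliers are all hypothetical, chosen only to show how a high-level controller might weigh what the robot can reliably execute against what works on a given opponent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    name: str
    side: str            # "forehand" or "backhand"
    success_rate: float  # hypothetical estimate of how often the robot lands it


# Illustrative skill library; names echo the article, numbers are made up.
EXAMPLE_SKILLS = [
    Skill("forehand topspin", "forehand", 0.80),
    Skill("forehand targeting", "forehand", 0.65),
    Skill("backhand targeting", "backhand", 0.70),
]


def choose_skill(skills, ball_side, effectiveness=None):
    """Pick the applicable skill with the highest expected payoff.

    effectiveness maps a skill name to a multiplier reflecting how well
    that skill has worked against the current opponent so far (assumed
    to default to 1.0 when nothing is known yet).
    """
    effectiveness = effectiveness or {}
    candidates = [s for s in skills if s.side == ball_side]
    if not candidates:
        raise ValueError(f"no skill available for side {ball_side!r}")
    return max(
        candidates,
        key=lambda s: s.success_rate * effectiveness.get(s.name, 1.0),
    )


if __name__ == "__main__":
    # With no opponent history, the most reliable forehand skill wins.
    print(choose_skill(EXAMPLE_SKILLS, "forehand").name)
    # If targeting has been paying off against this opponent, it can overtake.
    print(choose_skill(EXAMPLE_SKILLS, "forehand", {"forehand targeting": 1.3}).name)
```

The point of the sketch is the trade-off: a skill that is less reliable in general can still be selected when the match history suggests it is more effective against this particular player.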

The robot was trained in simulation using reinforcement learning. A set of human matches served as the starting point, and the models were then improved through training in simulation. The experience gained in simulation was transferred to the physical robot, which played against humans both to improve its performance and to collect additional examples that fed back into the next round of simulated training.
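The cycle described above can be outlined as a short loop. This is only a schematic sketch: `train_in_simulation` and `play_against_humans` are hypothetical placeholder functions that stand in for the real training and data-collection steps, and a "policy" here is reduced to a version number.

```python
def train_in_simulation(dataset, policy_version):
    """Placeholder: a real system would run reinforcement learning here."""
    return policy_version + 1


def play_against_humans(policy_version, num_rallies):
    """Placeholder: a real system would record rallies on the physical robot."""
    return [f"rally-v{policy_version}-{i}" for i in range(num_rallies)]


def training_cycles(seed_data, num_cycles, rallies_per_cycle):
    """Alternate between simulated training and real-world play."""
    dataset = list(seed_data)  # start from recorded human play
    policy_version = 0
    for _ in range(num_cycles):
        # 1. Improve the policy in simulation using all data gathered so far.
        policy_version = train_in_simulation(dataset, policy_version)
        # 2. Deploy to the robot, play humans, and keep the new examples.
        dataset.extend(play_against_humans(policy_version, rallies_per_cycle))
    return policy_version, dataset


if __name__ == "__main__":
    version, data = training_cycles(["human-rally-0", "human-rally-1"], 3, 4)
    print(version, len(data))
```

Each pass through the loop grows the dataset with real-world play, so later rounds of simulated training start from examples that better reflect how humans actually play against the robot.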

The results of the matches against humans demonstrate that the robot can play at an intermediate level. Professional players were able to quickly identify its weaknesses, noting that it struggled with underspin. This stems from two difficulties: returning low balls without hitting the table, and determining the ball’s spin in real time.

By leveraging these and other observations, the robot’s performance can be continuously improved until it reaches a level where it can compete with professional players.