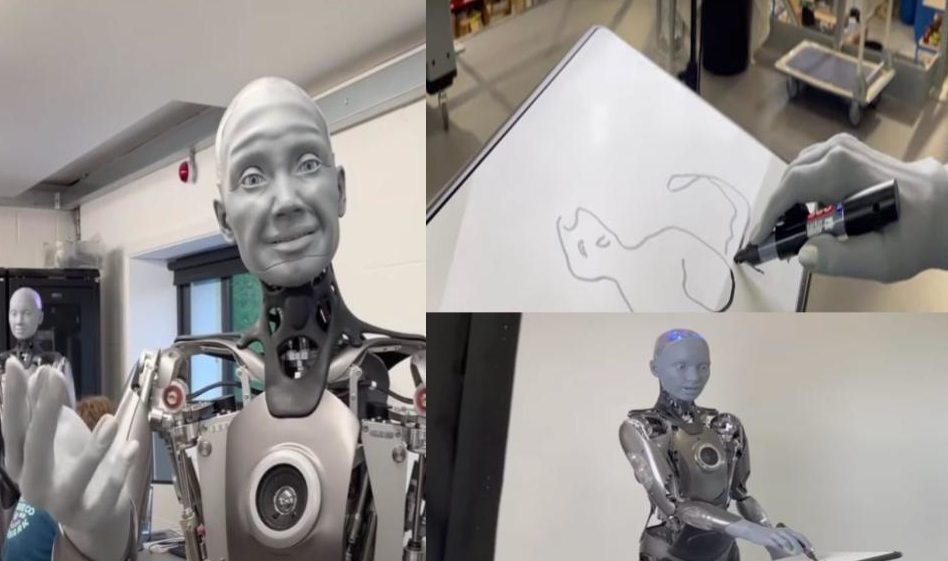

Most robots are designed to work with a single arm. When they do use two, it is usually for simple tasks such as lifting heavy objects, which require neither fingers nor the coordinated hand movements needed to tie shoes or hang clothes. Google DeepMind is working to change this by developing robots that can use both hands with a high degree of dexterity.

Their new framework, ALOHA Unleashed, learns from human demonstrations. Researchers record extensive demonstrations of complex tasks on a dual-arm robotic platform called ALOHA 2, then train a model to replicate those actions. The model is a transformer-based architecture trained with a diffusion loss, similar to the approach behind Google's Imagen, which generates images from text descriptions. It iteratively refines its predictions of the robot’s actions, much as Imagen gradually refines an image.
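The iterative refinement described above can be illustrated with a toy example. This is a minimal sketch, not DeepMind's implementation: `toy_denoiser`, `refine_actions`, the step-size schedule, and the four-value target are all hypothetical. The stand-in denoiser cheats by reading a known target, whereas a real policy would predict the noise from camera images and joint states.

```python
import random


def toy_denoiser(noisy_actions, target_actions):
    """Hypothetical stand-in for the learned network: returns estimated noise.

    It computes the noise from a known target purely for illustration;
    a trained model would have to predict it from observations.
    """
    return [a - t for a, t in zip(noisy_actions, target_actions)]


def refine_actions(target_actions, num_steps=10, seed=0):
    """Start from random noise and repeatedly remove the predicted noise."""
    rng = random.Random(seed)
    actions = [rng.gauss(0.0, 1.0) for _ in target_actions]
    errors = []
    for step in range(num_steps):
        predicted_noise = toy_denoiser(actions, target_actions)
        # Remove a growing fraction of the remaining noise; the final
        # step (fraction 1.0) removes whatever is left.
        step_size = 1.0 / (num_steps - step)
        actions = [a - step_size * n for a, n in zip(actions, predicted_noise)]
        # Track the largest remaining deviation from the target.
        errors.append(max(abs(a - t) for a, t in zip(actions, target_actions)))
    return actions, errors


if __name__ == "__main__":
    target = [0.2, -0.5, 0.9, 0.0]  # hypothetical joint targets
    actions, errors = refine_actions(target)
    for step, err in enumerate(errors, start=1):
        print(f"step {step:2d}: max error = {err:.4f}")
```

Each pass moves the action vector closer to the target, which mirrors how a diffusion model turns noise into a usable output over several refinement steps.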

To evaluate ALOHA Unleashed, the researchers tested it on five real-world tasks: hanging a shirt, tying shoes, replacing a robot finger, inserting gears, and organizing kitchen items. Each task had its own model, and the training data totaled approximately 26,000 demonstrations across the five tasks. The results were promising, demonstrating the model’s ability to transfer the demonstrated skills to the robot.

Looking ahead, the team aims to develop a single, versatile model capable of performing multiple tasks. This would simplify development and remove the need to manage several task-specific models on a single robot.