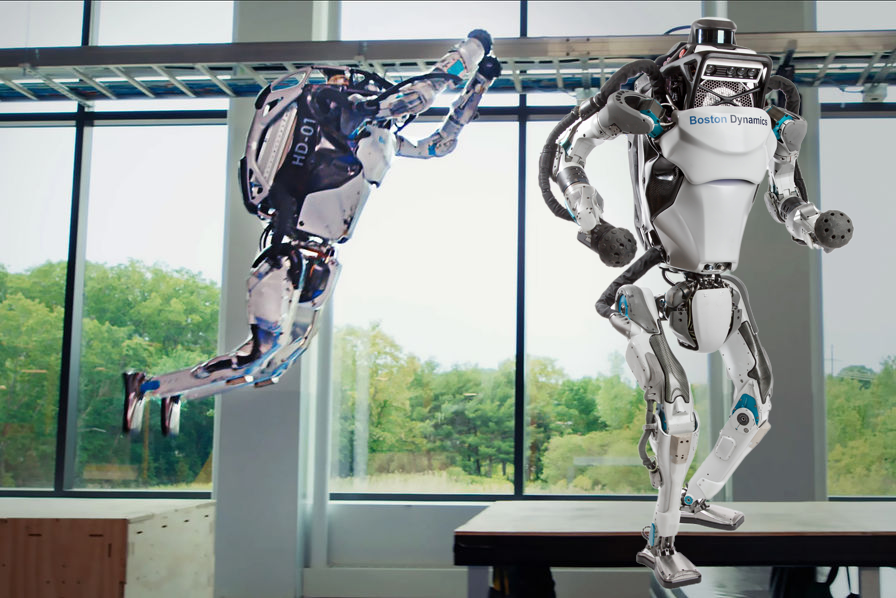

Instructing a robot to autonomously execute user commands is a complex task involving multiple steps. First, the robot must comprehend the user’s command (whether verbal or visual), then identify the corresponding action and determine the steps needed to execute it. Finally, the robot navigates the surrounding environment to achieve the goal.

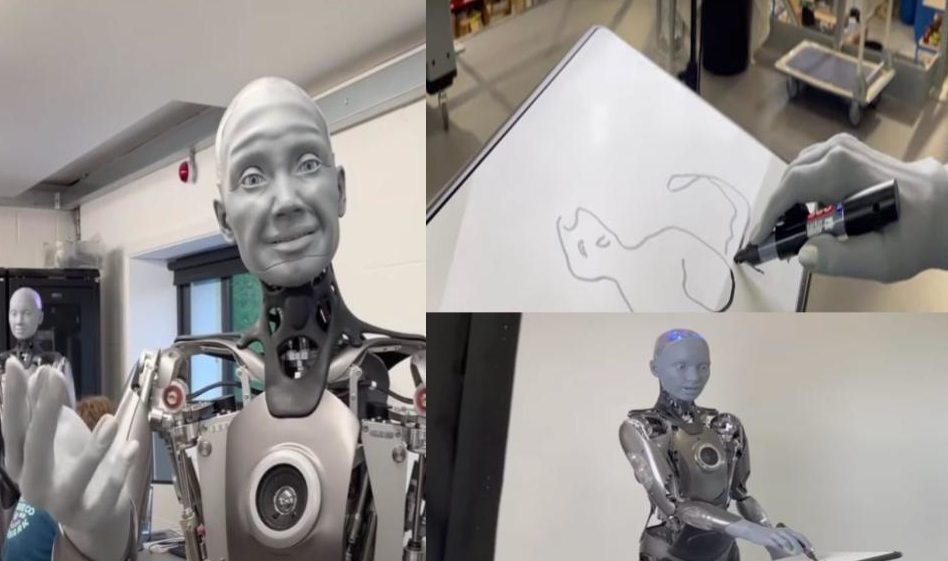

Visual Language Models (VLMs) empower robots to understand their surroundings by analyzing video footage captured by their cameras. These models can respond to user queries about objects or actions within the video. However, for a robot to truly execute commands, it must possess the ability to navigate and interact with the physical environment.

Consider the task of instructing a robot to discard an empty cola can into a trash bin. Since the bin might be situated in a different room and beyond the camera’s range, the robot must have previously explored the environment and mapped it for the VLM. Once the VLM identifies an image of the trash bin in that recorded material, the robot must then navigate towards it.

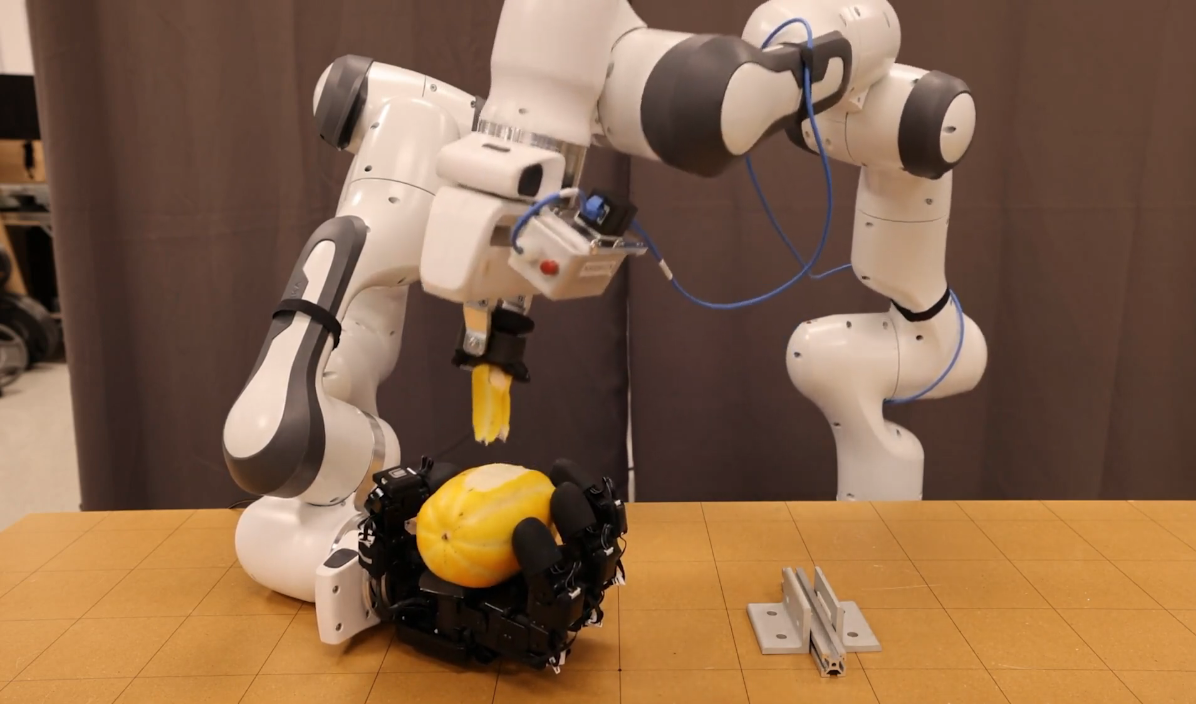

Google’s recent research proposes a novel approach that involves capturing a comprehensive video of the environment and feeding it to a VLM. The company used Gemini 1.5 Pro, a VLM with a context window large enough to take in the whole video. From this video, a topological graph of the environment is generated, linking each video frame to its corresponding coordinates within the space. When the VLM returns a frame showing the target, the robot uses this graph to plan a path from its current location to the location of the target object in that frame.
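The graph-and-path idea can be illustrated with a minimal sketch. It assumes each video frame already comes with a 2D position, connects frames that lie within a distance threshold, and plans with Dijkstra's algorithm. The function names, the `max_edge_len` threshold, and the sample coordinates are hypothetical; the article does not describe how Google builds the edges or which planner it uses.

```python
import heapq
import math


def build_topological_graph(frame_poses, max_edge_len=1.5):
    """Link frames whose recorded positions are at most max_edge_len metres apart.

    The distance threshold is an assumption made for this sketch.
    """
    graph = {i: [] for i in range(len(frame_poses))}
    for i, (xi, yi) in enumerate(frame_poses):
        for j in range(i + 1, len(frame_poses)):
            xj, yj = frame_poses[j]
            dist = math.hypot(xi - xj, yi - yj)
            if dist <= max_edge_len:
                graph[i].append((j, dist))
                graph[j].append((i, dist))
    return graph


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; returns a list of frame indices, or None if unreachable."""
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            # Walk the parent links back to the start and reverse them.
            path = [node]
            while node in parent:
                node = parent[node]
                path.append(node)
            return path[::-1]
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for neighbour, weight in graph[node]:
            new_cost = cost + weight
            if new_cost < best.get(neighbour, math.inf):
                best[neighbour] = new_cost
                parent[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    return None


# Hypothetical positions (in metres) of five frames from the recorded video.
frame_poses = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0), (2.0, 2.0)]
graph = build_topological_graph(frame_poses)

# Frame 0 is where the robot stands; frame 4 is the frame the VLM picked.
path = shortest_path(graph, start=0, goal=4)
print(path)
```

Because every node is a video frame, the frame chosen by the VLM doubles as the goal node, so no separate object-to-map lookup is needed in this sketch.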

Google has named this approach Mobility VLA (Vision-Language-Action). It involves pre-recording a video of the environment and using it to create a topological graph. The video is used by the VLM to respond to user requests, while the robot relies on the graph to navigate to the target location identified by the VLM.
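The division of labour between the two components might look roughly like the sketch below. Everything in it is a stand-in: `select_goal_frame` replaces the VLM with simple word overlap against hypothetical per-frame captions, and `nearest_node` and `waypoint_action` are illustrative helpers for the navigation side, not functions from Google's system.

```python
import math


def select_goal_frame(instruction, frame_captions):
    """Hypothetical stand-in for the VLM: pick the frame whose caption
    shares the most words with the instruction."""
    words = set(instruction.lower().split())
    scores = [len(words & set(caption.lower().split())) for caption in frame_captions]
    return scores.index(max(scores))


def nearest_node(position, node_positions):
    """Localise the robot to the closest graph node (Euclidean distance)."""
    return min(
        range(len(node_positions)),
        key=lambda i: math.dist(position, node_positions[i]),
    )


def waypoint_action(pose, target_xy):
    """Express the target in the robot's own frame.

    pose is (x, y, heading in radians); returns (forward, left, turn).
    """
    x, y, heading = pose
    dx, dy = target_xy[0] - x, target_xy[1] - y
    forward = math.cos(heading) * dx + math.sin(heading) * dy
    left = -math.sin(heading) * dx + math.cos(heading) * dy
    turn = math.atan2(left, forward)
    return forward, left, turn


# Hypothetical captions and positions for three frames of the recorded video.
frame_captions = [
    "a desk with a monitor",
    "a kitchen counter with a sink",
    "a trash bin next to a door",
]
node_positions = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0)]

# Language side: choose the goal frame for the user's request.
goal = select_goal_frame("throw this can in the trash bin", frame_captions)

# Navigation side: find where the robot is and how to move towards a node.
current = nearest_node((0.1, -0.1), node_positions)
forward, left, turn = waypoint_action((0.0, 0.0, 0.0), node_positions[1])
print(goal, current, forward, left, turn)
```

The point of the split is that the language side only has to output a frame index, while the navigation side never needs to interpret the instruction itself.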

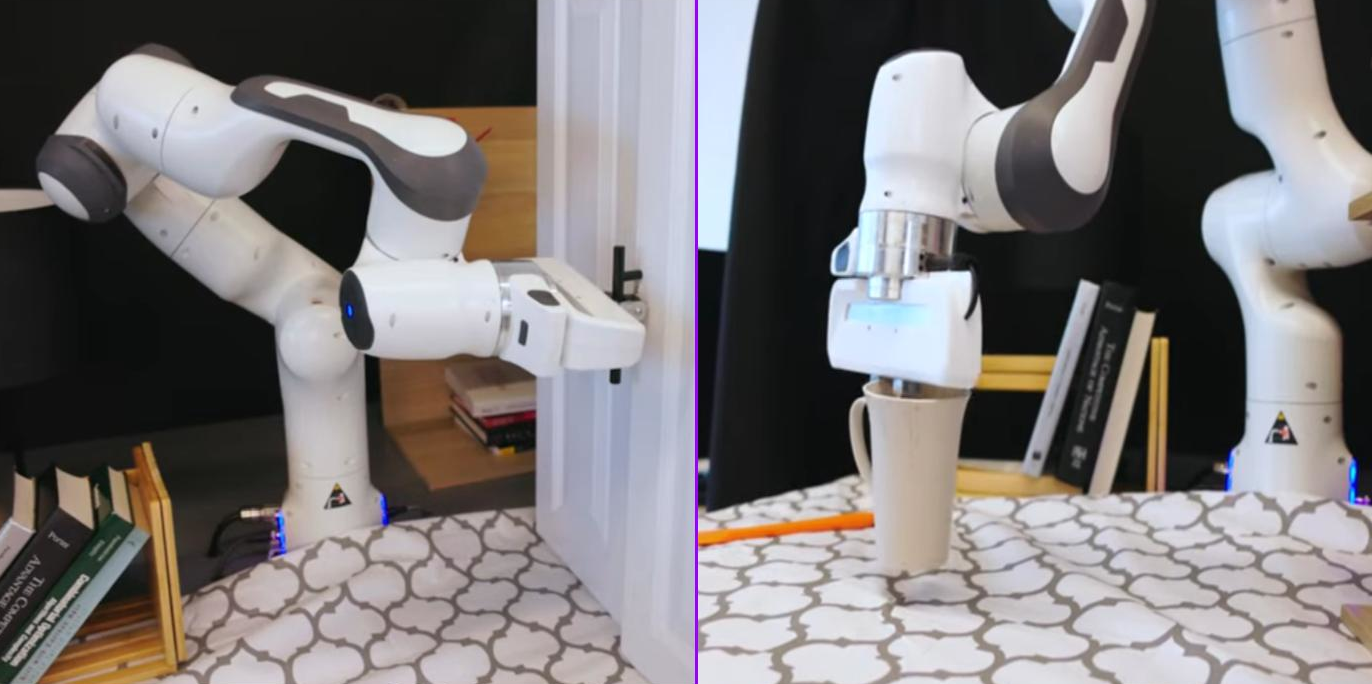

This approach was tested in the DeepMind office (836 m²), achieving success rates of 86% and 90%. These results represent improvements of 26% and 60% over previous approaches. The testing involved complex reasoning tasks, such as identifying locations hidden from public view and executing multimodal user commands.

The promising outcomes of these evaluations demonstrate the effectiveness of Mobility VLA in enabling robots to autonomously respond to commands and navigate within a defined environment. This methodology holds immense potential for advancing the capabilities of robots and expanding their applications in various real-world scenarios.