Google is one of the most important players in artificial intelligence. Its subsidiary DeepMind developed AlphaGo, the program that in 2016 beat the world champion at Go, a game renowned for its complexity and strategic depth. Google also created TensorFlow, a framework that lets researchers and developers build AI programs easily and efficiently.

Google does more than develop AI software. It has also designed its own processing units, known as TPUs, to accelerate training and inference for large AI models. These models require a huge number of parallel calculations and consume a lot of energy when run on conventional processors such as GPUs and CPUs.

What are Google TPUs?

TPUs (Tensor Processing Units) are specialized chips designed to accelerate machine learning tasks, particularly those involving neural networks (deep learning). Their design prioritizes both speed and efficiency, aiming to complete the complex calculations required by AI models faster and with lower energy consumption. Initially developed for internal use at Google in 2015, TPUs became available for broader use through Google Cloud services in 2018. Since 2019, Google has also offered a smaller version of the TPU chip suitable for integration into edge devices.

Why is Google developing TPUs?

GPUs (Graphics Processing Units) are workhorses for training and running modern AI models. They excel at parallel processing tasks like matrix multiplication, a fundamental operation in deep learning. However, GPUs have a more general-purpose architecture. While this offers flexibility, a more specialized approach can significantly improve efficiency.
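
To make the matrix multiplication point concrete, here is a minimal pure-Python sketch. It is not how any framework or chip implements the operation. The function name `matmul_with_mac_count` and the tiny example matrices are illustrative assumptions. The sketch multiplies two small matrices and counts the multiply-accumulate operations involved. Each output element is an independent dot product, which is why hardware that can run many multiply-accumulates at once speeds this up.

```python
def matmul_with_mac_count(a, b):
    """Multiply matrices a (m x k) and b (k x n), given as lists of rows.

    Returns (product, macs), where macs is the number of
    multiply-accumulate operations performed.
    """
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    product = [[0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            # Each output element is an independent dot product, so all
            # m * n of them could in principle be computed in parallel.
            acc = 0
            for p in range(k):
                acc += a[i][p] * b[p][j]
                macs += 1
            product[i][j] = acc
    return product, macs


# Hypothetical toy "layer": 2 inputs with 3 features each, 3 x 2 weights.
inputs = [[1, 2, 3], [4, 5, 6]]
weights = [[1, 0], [0, 1], [1, 1]]
outputs, macs = matmul_with_mac_count(inputs, weights)
print(outputs)  # [[4, 5], [10, 11]]
print(macs)     # 12, which equals m * k * n = 2 * 3 * 2
```

The operation count grows as m × k × n, so the matrices in large models quickly reach billions of multiply-accumulates per step.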

This is where TPUs come in. Google developed them specifically for training and running large AI models, and their design prioritizes speed and energy efficiency for these tasks. Compared to GPUs, TPUs can significantly shorten training times and reduce energy consumption.

By developing TPUs, Google strengthens its AI ecosystem, which encompasses everything from applications and models to frameworks and the underlying infrastructure. This comprehensive approach positions Google as a key player with control over the tools needed to develop effective AI.

Types of TPUs

Google offers three types of TPUs. Cloud TPUs are high-performance versions designed for its cloud services. Edge TPUs are smaller chips optimized for on-device processing. Integrated TPUs, found in recent Pixel phones, are built into the phone's main chip rather than shipped as standalone parts like Edge TPUs.

1. Cloud TPUs

Google has developed five generations of Tensor Processing Units (TPUs) so far. Initially designed for internal use on Google's own systems, TPUs are now offered through Google Cloud. However, you cannot purchase them and operate them independently outside of Google Cloud.

- First generation (2015): The initial TPU design, used mainly for internal research and development at Google.

- Second generation (2017): Brought improvements in performance and efficiency, and was the first generation to have limited availability on Google Cloud.

- Third generation (2018): Marked a significant leap in performance and became the first generation widely available on Google Cloud. It could be deployed in TPU Pods, which group multiple TPU chips for collaborative processing.

- Fourth generation (2021): Building on the advancements of prior generations, TPU v4 delivered another leap in performance and efficiency and came with more efficient TPU v4 Pods.

- Fifth generation (2023): The latest generation, TPU v5, boasts significant gains in performance and efficiency. In specific workloads such as large language models, it can deliver up to 3x the performance of the previous generation.
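
The idea of several chips in a Pod cooperating on one job can be illustrated with a toy data-parallel step. This is a conceptual sketch in plain Python, not Google's Pod software or interconnect. The "chips" are ordinary function calls, and `local_gradient`, `data_parallel_gradient`, and the one-parameter model are hypothetical names chosen for illustration. Each simulated chip computes a gradient on its own shard of the batch, and the results are then averaged, which is the role an all-reduce step typically plays on multi-chip systems.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_gradient(w, batch, num_chips):
    """Split the batch evenly across simulated chips and average their gradients."""
    if len(batch) % num_chips != 0:
        raise ValueError("batch size must be divisible by num_chips")
    shard_size = len(batch) // num_chips
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_chips)]
    # On real hardware each shard would be processed on a different chip
    # at the same time; here the shards are simply processed in a loop.
    grads = [local_gradient(w, shard) for shard in shards]
    # Averaging stands in for the all-reduce that combines per-chip results.
    return sum(grads) / num_chips


# Hypothetical data generated from y = 2x, with the weight starting at 0.
batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.0
single = local_gradient(w, batch)
parallel = data_parallel_gradient(w, batch, num_chips=2)
print(single, parallel)  # -30.0 -30.0
```

With equally sized shards, the averaged gradient matches the single-device result, so the work can be spread over more chips without changing the update.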

2. Edge TPUs

Designed for on-device AI in compact devices, Edge TPUs are small, low-power chips that enable real-time processing of signals from cameras, sensors, and other peripherals. Introduced in 2018, they began powering various Coral-branded devices in 2019.

3. Integrated TPUs

Integrated onto a single chip with CPUs, these TPUs are less powerful than standalone Edge TPUs but consume less power. Developed specifically for smartphones, they accelerate AI tasks such as face, voice, and image recognition, which are crucial features in modern operating systems and apps. The latest version, the Tensor G2, is a custom chip designed for Pixel phones that integrates elements of a TPU, CPU, and GPU. It aims to deliver better performance and efficiency for machine learning tasks.

TPU Competitors

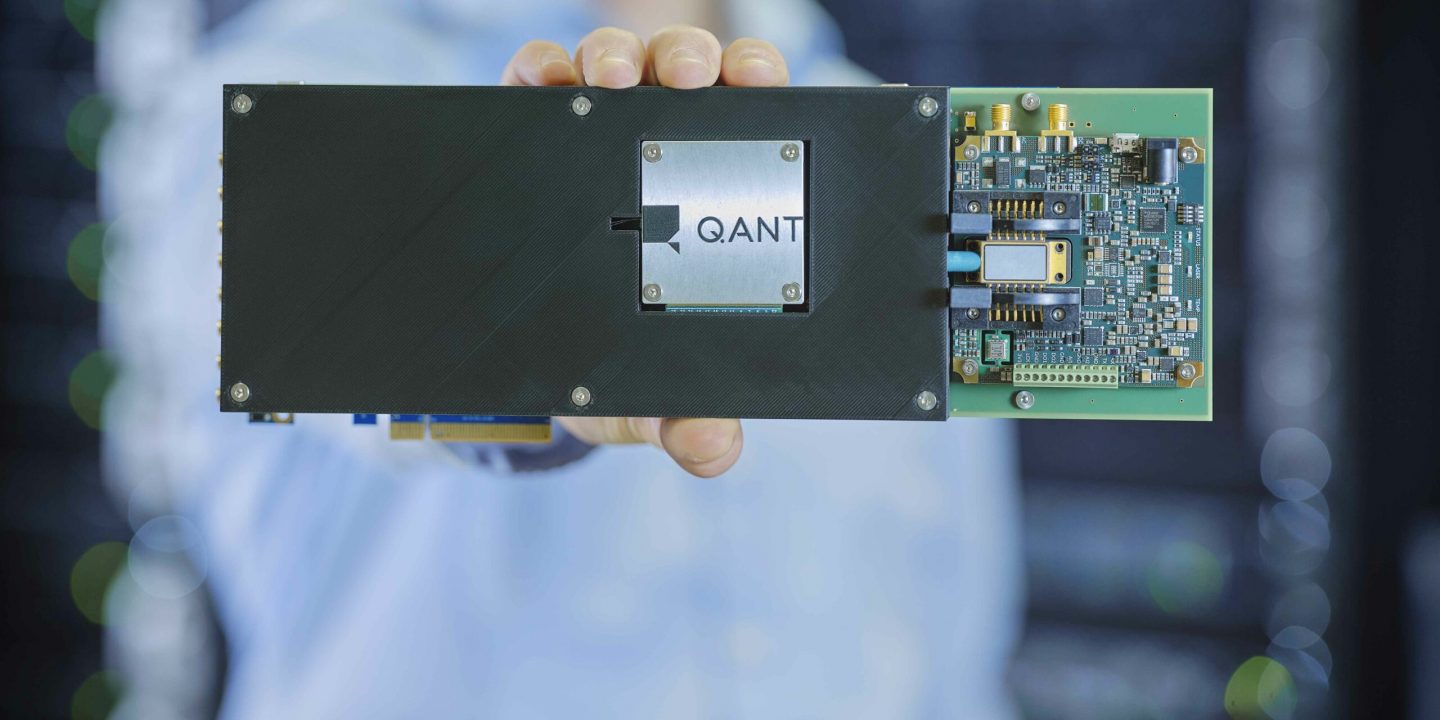

Google's cloud competitors, such as Amazon and Microsoft, rely on NVIDIA GPUs for a range of computing tasks beyond deep learning, but a more direct competitor to TPUs exists. NVIDIA's DGX system is a server designed specifically for deep learning workloads. These systems combine high-performance NVIDIA GPUs with optimized networking and storage components to handle the demands of deep learning efficiently.

Microsoft and Meta have explored custom AI accelerators, but NVIDIA GPUs remain their primary choice for most workloads. Google recognizes this user preference and the suitability of NVIDIA GPUs for smaller models, so it offers them alongside its Cloud TPUs within its cloud services. However, Google does not sell DGX systems or integrate them into its offerings.

Weaknesses of TPUs

A key weakness of TPUs lies in their hyper-specialization for specific types of deep learning models. While this specialization offers significant performance gains, it can make them less versatile. Rapid advancements in deep learning architectures could render them less effective for new models, potentially requiring expensive replacements.

This ties the lifespan of TPUs directly to the evolution of deep learning models, which change frequently. Since Google uses a vast number of TPUs for its AI systems, replacing outdated chips becomes a significant logistical and financial challenge.

What is the future of TPUs?

TPUs are a fundamental building block of Google’s AI ecosystem, and Google constantly strives to improve them. This development prioritizes two key areas:

- General performance improvements: This includes increasing the number of calculations TPUs can perform while reducing their energy consumption.

- Specialization for specific tasks: Google tailors TPUs to accelerate training and inference for specific models like large language models or audio/video generation models.