Artificial intelligence has ushered in a new era of computing, giving rise to previously unimaginable devices. Without AI, the Apple Vision Pro headset wouldn’t exist, the Rabbit R1 wouldn’t draw users on the strength of its orange color alone, and Humane’s AI Pin would garner little interest without the “AI” in its name.

Processor manufacturers like Intel and AMD have adapted to the trend of AI computing, avoiding the fate of companies like Nokia and Kodak. They have done so by launching a new generation of personal computers: AI PCs.

What is an AI PC?

An AI-enabled PC, also known as an AI PC, differs from a conventional personal computer in only one respect: it includes a dedicated processing unit that accelerates AI models.

Personal computers typically have two processing units:

- Central Processing Unit (CPU): This unit performs the essential arithmetic and logical operations required by the operating system and various programs.

- Graphics Processing Unit (GPU): This unit handles processing tasks specific to graphics programs and games, demanding high computational power for visual rendering.

AI PCs, in addition to a CPU and GPU, integrate a dedicated Neural Processing Unit (NPU) specifically designed to efficiently run AI models.

While the CPU can handle any process, including running AI models, it is typically slow and inefficient for this specific task. The GPU, on the other hand, excels at parallel calculations, making it suitable for both AI and gaming applications. The NPU stands out because its architecture is designed specifically to run deep learning models, a type of AI based on neural networks. This specialized design allows NPUs to outperform CPUs and GPUs in speed and energy efficiency when handling these models. The trade-off is that, unlike CPUs and GPUs, NPUs are not general-purpose processors and are not well-suited to other types of tasks.
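
To make the workload concrete, here is a minimal sketch of a single neural-network layer in plain Python. The function name, weights, and inputs are made up for illustration and are not taken from any real model. It shows that a layer boils down to many independent multiply-accumulate operations, which is the kind of work that parallel hardware such as GPUs and NPUs is built to speed up.

```python
# Illustrative only: a tiny fully connected layer written in plain Python.
# The function name, weights, and inputs are hypothetical values for this sketch.

def dense_layer(inputs, weights, biases):
    """Compute out[j] = relu(sum_i inputs[i] * weights[i][j] + biases[j])."""
    outputs = []
    mac_count = 0  # number of multiply-accumulate operations performed
    for j in range(len(biases)):
        # Each output depends only on the inputs and its own column of weights,
        # so these iterations do not depend on one another and could run in parallel.
        total = biases[j]
        for i, x in enumerate(inputs):
            total += x * weights[i][j]
            mac_count += 1
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs, mac_count


inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0],
    [0.25, 0.0],
    [-0.5, 1.0],
]
biases = [0.1, -0.5]

outputs, macs = dense_layer(inputs, weights, biases)
print(outputs, macs)
```

Even this toy layer with 3 inputs and 2 outputs needs 6 multiply-accumulate operations. Real deep learning models repeat the same pattern at a vastly larger scale, which is why a CPU working through the operations largely one after another struggles to keep up.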

Why do we need an AI PC?

Most AI models, such as ChatGPT, Gemini, and Copilot, run in the cloud and therefore don’t depend on the power of a personal computer. Even so, using them can put the privacy of individuals and companies at risk. Companies that rely on these AI models for work tasks risk exposing their data and internal information. This security concern has led at least 14 companies to prohibit or restrict their employees’ use of ChatGPT, according to Business Insider.

As these AI models become more ingrained in our lives, we entrust them with increasing amounts of personal and professional data. People who prioritize privacy and limit information sharing often prefer to run AI models locally on their PCs; in addition, the wider trend suggests that AI will become increasingly embedded in the everyday software we use on our PCs. In both scenarios, NPUs play a crucial role in accelerating app performance, keeping pace with the growing demands for computational power.

For example, text-to-speech applications rely on AI models to generate human-like voices. Design and graphics software is also using AI to remove sections of images, replace them with new elements, or modify backgrounds. Earlier this year, Microsoft announced the addition of a Copilot key to the keyboards of Windows computers, allowing Copilot, or at least part of it, to run directly on the user’s device and provide assistance.

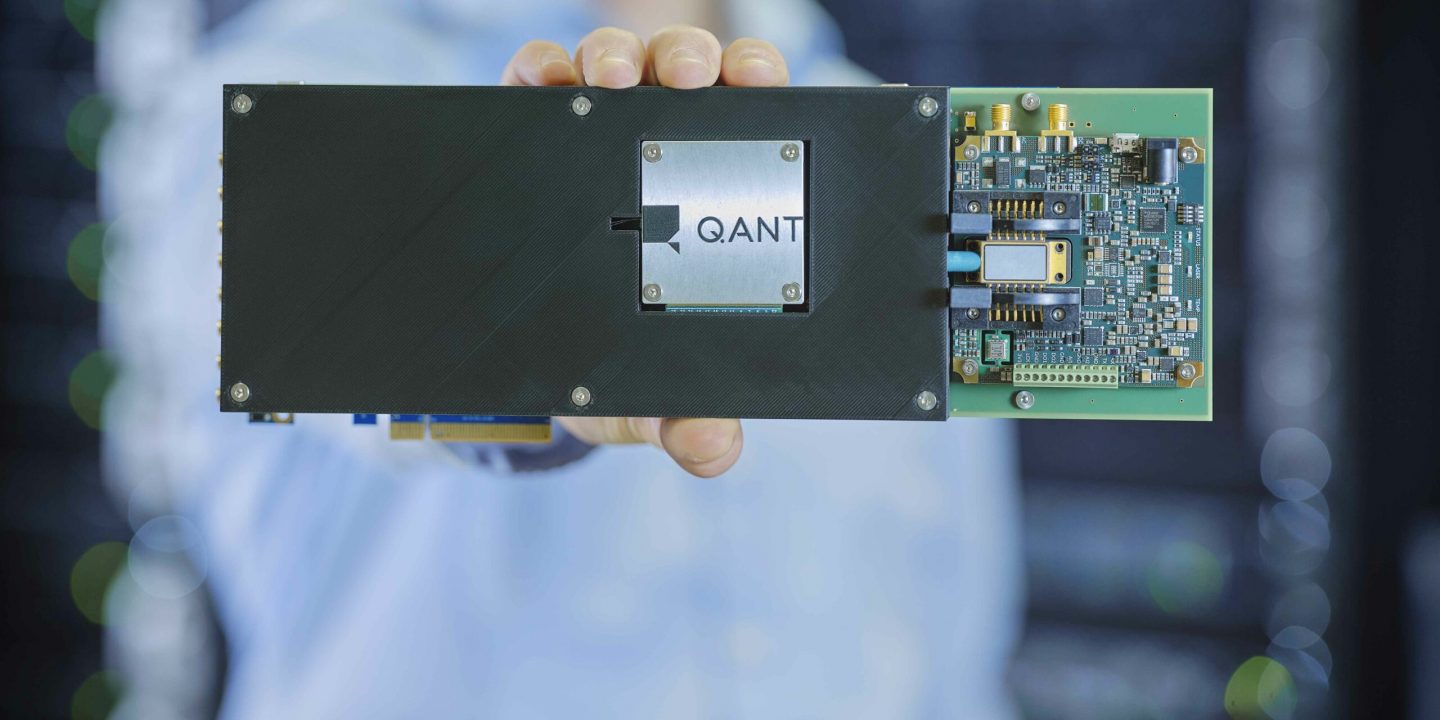

Who are the major manufacturers of NPUs?

- Apple pioneered the integration of a dedicated neural processing unit (NPU) into its computer chips. In 2020, it announced the M1 processor with this unit built in, and it has continued to develop the technology in subsequent M-series processors. However, Apple refers to this unit as the Neural Engine, not the NPU.

- In 2023, AMD began integrating an NPU into its Ryzen 7040HS series of processors, codenamed “Phoenix.” It is currently launching a second version in the Ryzen 8040 series, codenamed “Hawk Point,” with a further generation called “Strix Point” planned for next year.

- In December 2023, Intel launched the Intel® Core™ Ultra series of processors under the “Meteor Lake” codename. The first computer powered by these processors, the Samsung Galaxy Book4, was launched just a few days ago.

The success of these new chips hinges on software developers’ willingness to incorporate AI models that can run on them. To this end, chip manufacturers collaborate with software developers to facilitate their development process and to improve the efficiency of their models on these chips.
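
From the software side, supporting these chips often comes down to preferring the NPU when one is present and falling back gracefully when it is not. The following is a minimal sketch of that idea. The backend names (`"npu"`, `"gpu"`, `"cpu"`) and the `pick_backend` helper are hypothetical and do not come from any particular vendor's toolkit; real runtimes use their own identifiers.

```python
# Hypothetical backend names for illustration; real runtimes use their own identifiers.
PREFERRED_ORDER = ("npu", "gpu", "cpu")


def pick_backend(available, preferred=PREFERRED_ORDER):
    """Return the first backend in `preferred` that appears in `available`."""
    for name in preferred:
        if name in available:
            return name
    raise RuntimeError("no supported backend found")


# A machine without an NPU falls back to the GPU.
print(pick_backend({"cpu", "gpu"}))
# An AI PC that reports an NPU uses it first.
print(pick_backend({"cpu", "gpu", "npu"}))
```

Keeping the preference order in one place means an application can run on older machines unchanged and pick up the NPU automatically on newer ones.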

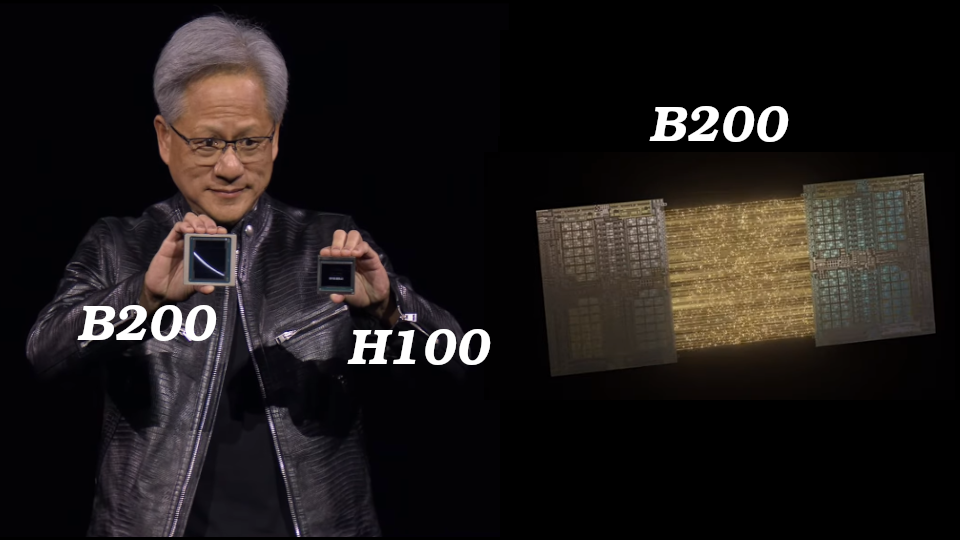

Are NPUs threatening Nvidia’s throne?

Nvidia, the leading manufacturer of AI chips, saw its market value surpass $2 trillion last month. With its H100 chip being the most widely used in data centers and its GPUs powering AI training and inference on personal computers, the company holds the largest market share. However, the emergence of other manufacturers developing NPUs could pose a future challenge to Nvidia’s dominance in the PC market.

A few days ago, Nvidia released its new RTX 500 and RTX 1000 chips for laptops. According to the company, these chips offer AI capabilities tens of times greater than those of the NPUs currently integrated into processors from Intel, Apple, and AMD. While Nvidia acknowledges the usefulness of NPUs for running less demanding models, it emphasizes that the RTX series remains the preferred option for tackling complex AI tasks.

The future of AI PCs

The usefulness of AI PCs hinges on the availability of AI software. This market is poised for significant growth in the coming years, with AI models likely undergoing a gradual shift from the cloud to personal computers.

However, gaming offers a cautionary precedent. Nvidia’s GPUs became the go-to option for computationally demanding games, while CPU-integrated graphics remained less utilized. This pattern might repeat with NPUs, potentially limiting their usefulness and widespread adoption.

In addition, the future of NPUs hinges on continued advancements in deep learning technology. Any significant breakthrough in AI that leads to more efficient methods for building intelligent systems will likely require modifications to existing NPUs or even the development of entirely new architectures.