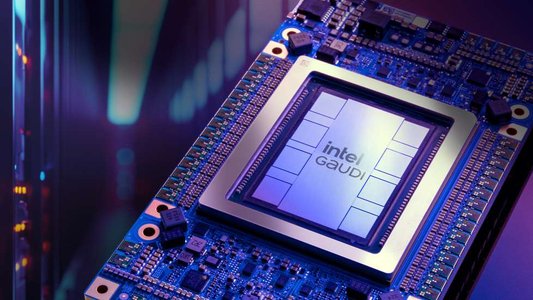

Intel has unveiled its next-generation AI accelerator, the Intel® Gaudi® 3, aimed at enterprise generative AI (GenAI) workloads. General availability is expected in Q3 2024, when it will compete with Nvidia and AMD in the AI accelerator market.

Compared to its predecessor, Gaudi 2, the new chip brings significant performance gains. It raises the number of Tensor Processor Cores (TPCs) from 24 to 64 and Matrix Math Engines (MMEs) from 2 to 8. It also offers more memory (128 GB vs. 64 GB) with higher bandwidth (3.7 TB/s vs. 2.4 TB/s), and it uses 24 ports of 200 Gb Ethernet for high-speed connectivity.
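To put the 24 × 200 Gb Ethernet figure in perspective, here is a back-of-the-envelope sketch of aggregate per-chip network bandwidth. It assumes every port runs at its full 200 Gb/s line rate in each direction (full duplex) and ignores protocol overhead; the function name `aggregate_bandwidth` is purely illustrative.

```python
# Rough aggregate Ethernet bandwidth for one Gaudi 3 accelerator.
# Assumption: each port delivers its full 200 Gb/s line rate in each
# direction (full duplex); protocol and encoding overhead are ignored.

PORTS = 24
GBIT_PER_PORT = 200  # Gb/s per port, per direction


def aggregate_bandwidth(ports: int, gbit_per_port: float) -> dict:
    """Return aggregate bandwidth in Gb/s and GB/s (one way and bidirectional)."""
    gbit_one_way = ports * gbit_per_port
    gbyte_one_way = gbit_one_way / 8  # 8 bits per byte
    return {
        "Gb/s per direction": gbit_one_way,
        "GB/s per direction": gbyte_one_way,
        "GB/s bidirectional": 2 * gbyte_one_way,
    }


if __name__ == "__main__":
    for label, value in aggregate_bandwidth(PORTS, GBIT_PER_PORT).items():
        print(f"{label}: {value:g}")
```

Under these assumptions, the ports add up to 4.8 Tb/s (600 GB/s) in each direction, or about 1.2 TB/s counting both directions.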

Intel expects Gaudi 3 to deliver 4x the AI compute, double the network bandwidth, and 1.5x the HBM memory bandwidth of Gaudi 2, gains it says will let the chip handle ever-larger Large Language Models (LLMs) without performance degradation.
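A quick way to sanity-check these multipliers is to divide the Gaudi 3 spec figures quoted in this article by the Gaudi 2 ones. The sketch below does only that; the numbers are the article's, not independently verified, and the `SPECS` table and `ratios` helper are illustrative names.

```python
# Gaudi 3 / Gaudi 2 ratios computed from the spec figures quoted in this
# article. These are the article's numbers, not independent measurements.

SPECS = {
    # spec name: (Gaudi 2, Gaudi 3)
    "Tensor Processor Cores": (24, 64),
    "Matrix Math Engines": (2, 8),
    "HBM capacity (GB)": (64, 128),
    "HBM bandwidth (TB/s)": (2.4, 3.7),
}


def ratios(specs: dict) -> dict:
    """Return the Gaudi 3 / Gaudi 2 ratio for each spec, rounded to 2 decimals."""
    return {name: round(new / old, 2) for name, (old, new) in specs.items()}


if __name__ == "__main__":
    for name, r in ratios(SPECS).items():
        print(f"{name}: {r}x")
```

The bandwidth ratio (about 1.54x) matches the quoted 1.5x figure, and the 4x jump in MME count is in line with the 4x compute claim. This is only a rough cross-check, since peak compute also depends on clock speed and data type.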

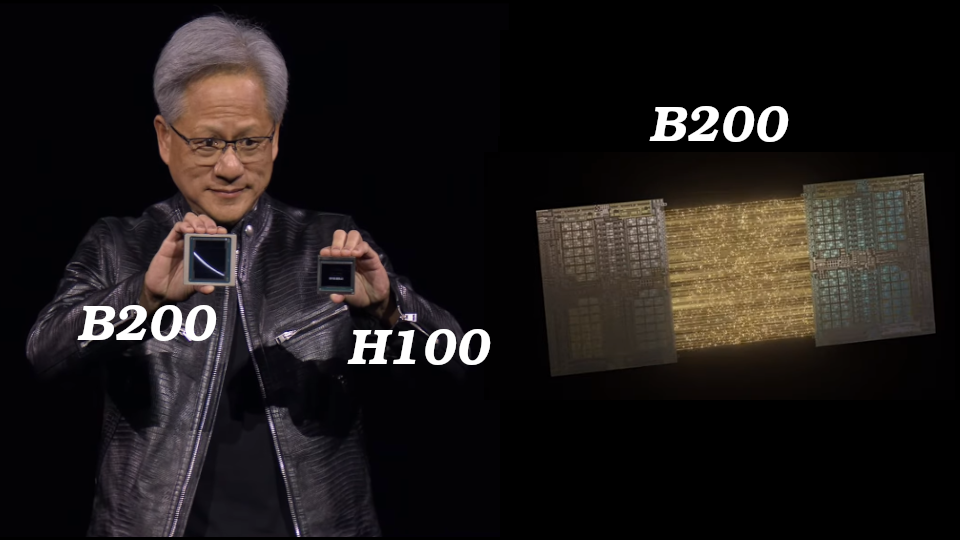

Intel vs Nvidia

Intel claims Gaudi 3 outperforms Nvidia’s H100 in several key areas. Notably, it boasts up to 1.7x faster training for large language models such as Llama2-13B and GPT-3 175B, and 1.5x faster inference.
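Speedup multipliers are easy to misread as time savings. The sketch below converts Intel's claimed factors into wall-clock time for a hypothetical 100-hour H100 workload; the baseline is invented purely for illustration, and the 1.7x and 1.5x factors are Intel's unverified claims.

```python
# Convert an "Nx faster" speedup claim into wall-clock time and time saved.
# The 100-hour baseline is hypothetical; the 1.7x and 1.5x factors are
# Intel's claims as reported, not independent benchmark results.


def time_with_speedup(baseline_hours: float, speedup: float) -> float:
    """Time for the same job on a device that is `speedup` times faster."""
    return baseline_hours / speedup


def percent_time_saved(speedup: float) -> float:
    """Share of wall-clock time saved, as a percentage."""
    return (1 - 1 / speedup) * 100


if __name__ == "__main__":
    baseline = 100.0  # hypothetical H100 workload, in hours
    for label, s in [("training (up to 1.7x)", 1.7), ("inference (1.5x)", 1.5)]:
        print(f"{label}: {time_with_speedup(baseline, s):.1f} h, "
              f"{percent_time_saved(s):.0f}% less time")
```

In other words, if the claims hold, a 1.7x speedup cuts run time by about 41% (100 h to roughly 58.8 h), and a 1.5x speedup by about 33%.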

Because Nvidia’s Blackwell B200 has not been released yet, a direct comparison with Gaudi 3 isn’t possible. Independent benchmarks are also needed to verify Intel’s performance claims against the H100.

Intel says Gaudi 3’s high-speed Ethernet ports can be used to build scalable racks and large clusters containing thousands of accelerators. This capability positions Gaudi 3 as a potential competitor to Nvidia’s AI factory solutions.