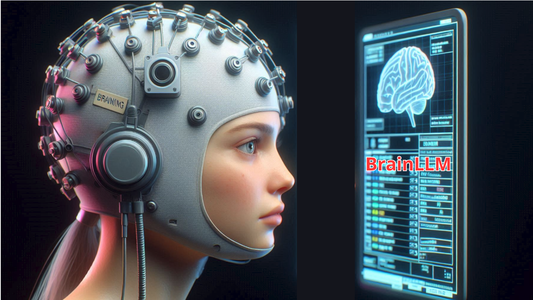

Imagine wearing a helmet that reads your thoughts and instantly writes them down in front of you. Wouldn’t that revolutionize communication? Couldn’t it help solve the challenges faced by individuals with speech difficulties, while making it easier and faster to express our ideas and convey them to machines or other people? This is precisely the goal of the brain-language model known as “BrainLLM.”

An international team of researchers from China, Denmark, and the Netherlands developed this model, harnessing the ability of large language models (LLMs), such as the one behind ChatGPT, to generate coherent, cohesive text from textual input. The team adapted this technology to convert brain activity recorded with functional magnetic resonance imaging (fMRI), which tracks changes in blood oxygenation linked to neural activity rather than electrical signals directly, into words that reflect what is on a person’s mind.

This approach differs from previous experiments, which relied on a limited set of predefined sentences or words. In those earlier studies, artificial intelligence models were trained to select specific outputs in response to brain signals strongly associated with those preset phrases. Because every output had to be one of the predefined options, this method restricted the variety, coherence, and consistency of the generated sentences and limited how fully they could capture the ideas embedded in brain recordings.

Rather than modifying the language models themselves, the researchers focused on transforming brain recordings into inputs the model can process as if they were text. To achieve this, they built an artificial neural network that converts the recordings into numerical representations resembling those the language model uses for words. Because fMRI recordings unfold over time, the researchers treated each one as a sequence of time segments, with each segment playing the role of a word, so that the recording becomes a continuous string of word-like units, much like a text sequence.
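To make the idea of word-like representations concrete, here is a minimal Python sketch under simplifying assumptions. It treats an fMRI recording as a table with one row per time point and one column per voxel, and uses a single linear layer (a hypothetical stand-in; the actual Brain Adapter architecture may differ) to map each time point to a vector the same size as the language model’s word representations. The names `BrainAdapter`, `linear`, and `build_model_input`, the dimensions, and the choice to place the brain-derived vectors before the text prompt are all illustrative assumptions, not the researchers’ code.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Compute weight @ x + bias for a single time point."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


class BrainAdapter:
    """Hypothetical minimal adapter: one linear layer that maps each fMRI
    time point (a vector of voxel values) to one word-embedding-sized vector."""

    def __init__(self, n_voxels: int, embed_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        scale = 1.0 / n_voxels ** 0.5
        self.weight = [[rng.gauss(0.0, scale) for _ in range(n_voxels)]
                       for _ in range(embed_dim)]
        self.bias = [0.0] * embed_dim

    def __call__(self, recording: Matrix) -> Matrix:
        # recording: one row per time point; each row becomes one pseudo-word
        return [linear(frame, self.weight, self.bias) for frame in recording]


def build_model_input(brain_tokens: Matrix, text_embeddings: Matrix) -> Matrix:
    """Concatenate brain-derived pseudo-words with the embedded text prompt
    (placing them first is an assumption made for this sketch)."""
    return brain_tokens + text_embeddings


rng = random.Random(1)
n_voxels, embed_dim = 50, 8
recording = [[rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for _ in range(4)]  # 4 time points
prompt = [[0.0] * embed_dim for _ in range(3)]  # stand-in for 3 embedded prompt words
adapter = BrainAdapter(n_voxels, embed_dim)
sequence = build_model_input(adapter(recording), prompt)
print(len(sequence), len(sequence[0]))  # 7 8
```

Each of the four time points becomes one pseudo-word, so the language model would receive seven vectors of equal size: four derived from the brain recording, followed by three from the text prompt.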

Dubbed the “Brain Adapter,” this network was trained on texts paired with fMRI recordings of people listening to or reading those texts. Each recording therefore carries the meaning of the phrase the person perceived, while the paired text provides the matching ground truth. During training, the Brain Adapter transforms a recording into text-like representations, which are then combined with the text input to the language model. The Brain Adapter’s parameters are adjusted so that the language model reproduces, as closely as possible, the exact text the person was reading or hearing during the recording.
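The training idea can be illustrated with a deliberately tiny Python sketch. Here the “language model” is a hypothetical frozen linear readout that scores a five-word vocabulary, and each synthetic fMRI frame is paired with the word heard at that moment. The loss is the negative log-likelihood of the perceived word; gradients pass through the frozen model but update only the adapter weights, mirroring the approach of leaving the language model itself unchanged. All data, sizes, and function names are made up for illustration; a real system would use an actual pretrained LLM, real recordings, and an automatic-differentiation library.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product m @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax."""
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def mean_loss(adapter: Matrix, frozen_lm: Matrix, data: List[Tuple[Vector, int]]) -> float:
    """Average negative log-likelihood of the perceived words."""
    losses = []
    for frame, target in data:
        probs = softmax(matvec(frozen_lm, matvec(adapter, frame)))
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)


def train_step(adapter: Matrix, frozen_lm: Matrix, frame: Vector, target: int, lr: float) -> None:
    """One gradient step on the adapter only; frozen_lm is read but never modified."""
    hidden = matvec(adapter, frame)             # brain frame -> word-representation space
    probs = softmax(matvec(frozen_lm, hidden))  # frozen readout scores every vocabulary word
    # Cross-entropy gradient w.r.t. the logits: probs - one_hot(target)
    d_logits = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    # Backpropagate through the frozen readout: frozen_lm^T @ d_logits
    d_hidden = [sum(frozen_lm[i][j] * d_logits[i] for i in range(len(frozen_lm)))
                for j in range(len(hidden))]
    # Adapter gradient is the outer product of d_hidden and frame
    for j, row in enumerate(adapter):
        for k in range(len(row)):
            row[k] -= lr * d_hidden[j] * frame[k]


rng = random.Random(0)
n_voxels, embed_dim, vocab = 6, 4, 5
frozen_lm = [[rng.gauss(0.0, 0.5) for _ in range(embed_dim)] for _ in range(vocab)]
lm_snapshot = [row[:] for row in frozen_lm]  # kept only to confirm the "LM" stays frozen
adapter = [[rng.gauss(0.0, 0.1) for _ in range(n_voxels)] for _ in range(embed_dim)]
# Toy paired data: each synthetic "fMRI frame" is labeled with the word heard at that moment
data = [([rng.gauss(0.0, 1.0) for _ in range(n_voxels)], word) for word in range(vocab)]

before = mean_loss(adapter, frozen_lm, data)
for _ in range(100):
    for frame, target in data:
        train_step(adapter, frozen_lm, frame, target, lr=0.05)
after = mean_loss(adapter, frozen_lm, data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

Because the frozen readout never changes, any drop in loss comes entirely from the adapter learning to place brain frames where the language model already expects the matching words.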

After training, BrainLLM was tested and outperformed earlier approaches, generating sentences that more accurately reflected what a person was hearing or reading while keeping the language coherent and consistent. However, its accuracy varied across individuals. Although the human brain works in broadly similar ways from one person to the next, each individual produces distinct brain signals for the same idea, which helps explain the differences in results.

The researchers also noted that using brain recordings from specific regions, such as Broca’s area and the auditory cortex, improved accuracy. These areas play critical roles in processing and producing natural language.

Despite these achievements, significant improvements are still needed before the model can be used in practice. Suggested enhancements include focusing on brain regions tied to language formation, customizing the system to account for individual differences, and adopting wearable electroencephalography (EEG) devices. fMRI scanners provide detailed measurements but are large, expensive, and confined to experimental and research settings, whereas EEG, which records the brain’s electrical activity through electrodes on the scalp, offers a more practical alternative.

This research marks a promising step toward future applications in treating speech disorders and developing brain-computer interfaces, bringing us closer to decoding and deeply understanding brain signals.