The substantial effort, cost, and iterative nature of scientific research often slow down progress. Google is addressing this by providing scientists with its AI Co-Scientist, a multi-agent AI system that works as a virtual scientific collaborator to help generate novel hypotheses, develop research proposals, and accelerate scientific discoveries.

How Does Google AI Co-Scientist Work?

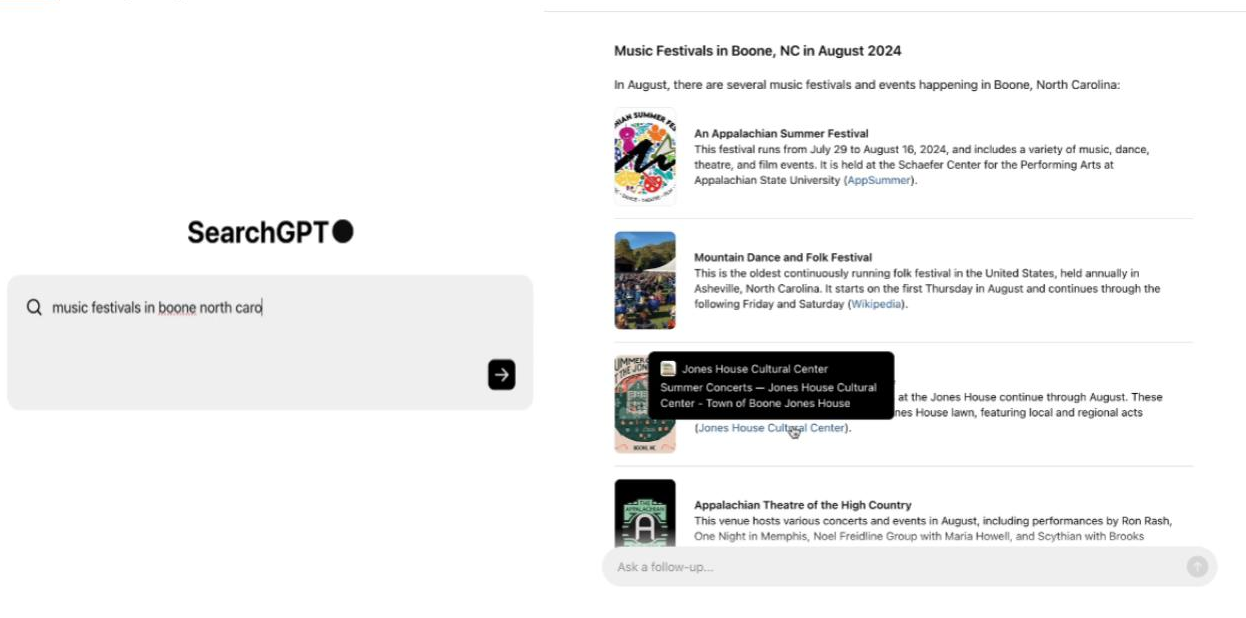

From the outside, it works much like other advanced AI chatbots such as OpenAI’s o3 or DeepSeek R1. Scientists interact with the system through text prompts: they state an initial research goal, refine it as needed, and guide the system’s progress.

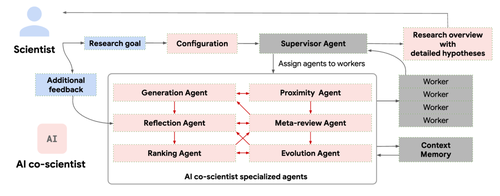

The Co-Scientist operates using a multi-agent system, managed by a Supervisor agent that assigns tasks to dedicated agents and oversees execution.

The Configuration agent begins by parsing the research goal to create a structured research plan. This plan defines proposal preferences, constraints, and key objectives for generating research proposals.

Based on this configuration, the Supervisor agent sets up a task queue and coordinates specialized agents. These agents are designed to mimic scientific reasoning, allowing them to generate innovative hypotheses and research plans. They all use Gemini 2.0 and can also interact with external tools, such as web search engines and specialized AI models.
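To make the task-queue idea concrete, here is a minimal sketch of a supervisor that dispatches queued tasks to registered agents. It is only an illustration under stated assumptions: the `Task`, `Supervisor`, `generation`, and `reflection` names are hypothetical, the agents are plain functions instead of Gemini-backed workers, and the real system's scheduling logic is not described here.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Tuple


@dataclass
class Task:
    agent: str    # hypothetical agent name, e.g. "generation"
    payload: str  # text the agent should work on


# A handler returns its result plus any follow-up tasks to enqueue.
Handler = Callable[[str], Tuple[str, List[Task]]]


class Supervisor:
    """Toy supervisor: pulls tasks off a FIFO queue and dispatches them."""

    def __init__(self) -> None:
        self.queue: Deque[Task] = deque()
        self.agents: Dict[str, Handler] = {}
        self.log: List[Tuple[str, str]] = []

    def register(self, name: str, handler: Handler) -> None:
        self.agents[name] = handler

    def submit(self, task: Task) -> None:
        self.queue.append(task)

    def run(self) -> List[Tuple[str, str]]:
        # Process tasks in arrival order until the queue is empty.
        while self.queue:
            task = self.queue.popleft()
            result, follow_ups = self.agents[task.agent](task.payload)
            self.log.append((task.agent, result))
            self.queue.extend(follow_ups)
        return self.log


# Stand-in agents (assumptions): a real agent would call an LLM here.
def generation(goal: str) -> Tuple[str, List[Task]]:
    hypothesis = f"hypothesis for: {goal}"
    return hypothesis, [Task("reflection", hypothesis)]


def reflection(hypothesis: str) -> Tuple[str, List[Task]]:
    return f"reviewed: {hypothesis}", []


supervisor = Supervisor()
supervisor.register("generation", generation)
supervisor.register("reflection", reflection)
supervisor.submit(Task("generation", "explain cf-PICI host range"))
for agent, result in supervisor.run():
    print(agent, "->", result)
```

Letting each handler return follow-up tasks keeps the agents decoupled: the generation step does not call the reviewer directly, it only asks the supervisor to schedule a review.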

The Generation Agent kickstarts the research process by identifying key focus areas, refining them iteratively, and formulating initial hypotheses and proposals.

The Reflection Agent acts as a scientific peer reviewer, assessing the correctness, quality, and novelty of hypotheses and research proposals.

The Ranking Agent organizes an Elo-based tournament to evaluate and prioritize research proposals through pairwise comparisons and simulated scientific debates, enabling iterative improvements.
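An Elo-based tournament of this kind can be sketched with the standard Elo update rule. The snippet below is an assumption-laden illustration, not the system's actual implementation: the starting rating of 1200, the K-factor of 32, and the `judge` callback (standing in for a pairwise comparison or simulated debate) are all hypothetical choices.

```python
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation that A beats B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, a_won: bool,
               k: float = 32.0) -> Tuple[float, float]:
    """Return the new (A, B) ratings after one pairwise match."""
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


def run_tournament(hypotheses: List[str],
                   judge: Callable[[str, str], bool],
                   initial: float = 1200.0,
                   k: float = 32.0) -> List[str]:
    """Play every pair once and return hypotheses sorted best-first.

    judge(a, b) returns True when hypothesis a wins the comparison.
    """
    ratings: Dict[str, float] = {h: initial for h in hypotheses}
    for a, b in combinations(hypotheses, 2):
        ratings[a], ratings[b] = update_elo(ratings[a], ratings[b], judge(a, b), k)
    return sorted(ratings, key=lambda h: ratings[h], reverse=True)


# Toy judge (assumption): prefer the longer text. A real judge would be an
# LLM-driven comparison or debate.
ranking = run_tournament(["aa", "a", "aaa"], judge=lambda a, b: len(a) > len(b))
print(ranking)
```

Because ratings move by the gap between the actual and expected result, an upset win against a highly rated hypothesis shifts the ranking more than an expected win does.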

The Proximity Agent computes similarities among hypotheses, removes duplicates, and optimizes exploration of the hypothesis landscape.
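As a rough illustration of similarity-based deduplication, the sketch below compares hypotheses by word overlap (Jaccard similarity) and drops near-duplicates. This is a stand-in technique chosen for simplicity: how the Proximity Agent actually measures similarity is not specified here, and the `jaccard` and `deduplicate` helpers and the 0.8 threshold are assumptions.

```python
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity between two texts (case-insensitive)."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0  # two empty texts are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def deduplicate(hypotheses: List[str], threshold: float = 0.8) -> List[str]:
    """Keep a hypothesis only if it is not too similar to one already kept."""
    kept: List[str] = []
    for hypothesis in hypotheses:
        if all(jaccard(hypothesis, other) < threshold for other in kept):
            kept.append(hypothesis)
    return kept


candidates = [
    "phage tails expand host range",
    "Phage tails expand host range",   # duplicate up to capitalization
    "capsid proteins drive transfer",
]
print(deduplicate(candidates))
```

A production system would more plausibly compare embedding vectors than raw word sets, but the filtering loop would look much the same.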

The Evolution Agent iteratively refines the top-ranked hypotheses identified by the Ranking Agent, synthesizing ideas, drawing on analogies, leveraging literature, and improving clarity and coherence.

The Meta-Review Agent facilitates continuous improvement by synthesizing review insights, identifying debate patterns, optimizing agent performance, and refining hypotheses into a comprehensive research overview for the scientist’s evaluation.

Comparison with Reasoning LLMs

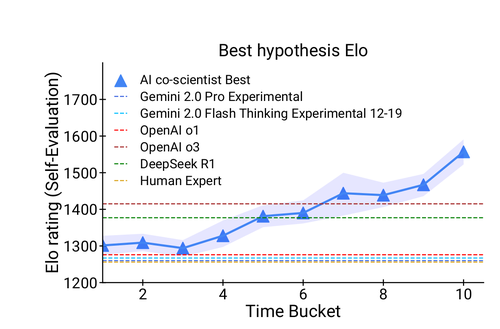

To compare the Co-Scientist with other state-of-the-art LLMs and reasoning models (Gemini 2.0 Pro Experimental, Gemini 2.0 Flash Thinking Experimental 12-19, OpenAI o1, OpenAI o3-mini-high, and DeepSeek R1), researchers analyzed 15 research goals that seven biomedical experts had selected as challenging problems. Each goal was carefully structured with a title, clear objectives, preferred biological or disease areas, desired solution attributes, and constraints on experimental techniques.

The experts provided their “best guess” hypotheses or solutions, and the outputs of the models were compared with these expert guesses, as well as with the Co-Scientist’s proposals. Performance for each curated goal was then assessed using the Co-Scientist’s Elo rating metric.

As more compute was spent on iterative improvement, the Co-Scientist outperformed the other frontier LLMs and reasoning models in Elo rating. Notably, newer models like OpenAI o3-mini-high and DeepSeek R1 performed competitively while using significantly less compute and reasoning time. Importantly, no performance saturation was observed, indicating that scaling test-time compute could further improve the quality of the Co-Scientist’s results.

Furthermore, the Co-Scientist significantly outperformed the human experts as measured by the Elo metric. However, it’s important to remember that Elo is auto-evaluated: it doesn’t measure ground truth or scientific accuracy directly, but rather how well a proposal addresses the research goal relative to competing proposals. This means the Elo rating can sometimes favor certain attributes (like novelty or comprehensiveness) over factors like alignment with expert preferences or accuracy.

Evaluating the Co-Scientist on Real-World Scientific Problems

Since the Elo metric is not a direct measure of scientific accuracy, the Co-Scientist developers also evaluated the system in real-world scenarios. They performed three wet-lab experiments, one of which involved generating hypotheses to explain the evolution of bacterial gene transfer mechanisms related to antimicrobial resistance (AMR), that is, the ways microbes have evolved to resist infection-fighting drugs.

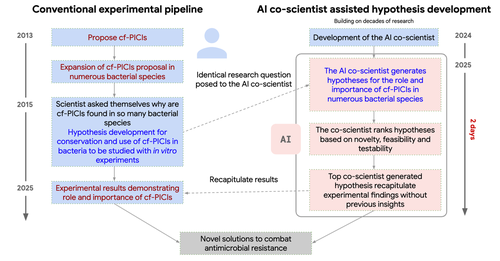

Scientists at Imperial College London (ICL) have spent a decade studying superbugs resistant to antibiotics, focusing on how certain bacteria contribute to antibiotic-resistant infections, a growing global health challenge. In collaboration with the Fleming Initiative, which works to control antimicrobial resistance, Google tasked the ICL team with testing the AI Co-Scientist on the same problem.

The researchers asked the AI system to explore a topic they had already made a novel discovery on, but which had not yet been made public: explaining how capsid-forming phage-inducible chromosomal islands (cf-PICIs) exist across multiple bacterial species.

The AI Co-Scientist independently proposed that cf-PICIs interact with diverse phage tails to expand their host range. This hypothesis matches the discovery the team had already validated experimentally before using the AI system. Those findings are now published in co-timed manuscripts with collaborators at the Fleming Initiative and Imperial College London.

This highlights the value of the AI Co-Scientist as an assistive technology, as it was able to leverage decades of research and prior open-access literature to arrive at the same conclusions in a fraction of the time (2 days).

Conclusion

The AI Co-Scientist demonstrates significant potential in fields that demand complex data analysis and hypothesis testing, such as drug discovery and antimicrobial resistance research.

While it does not fully automate knowledge production, it greatly accelerates and streamlines the research process. This allows researchers to focus more on the creative and conceptual aspects of their work, driving meaningful scientific advancements across a wide range of disciplines.

By enhancing efficiency and supporting innovative thinking, the Co-Scientist system is poised to play a key role in shaping the future of scientific discovery.