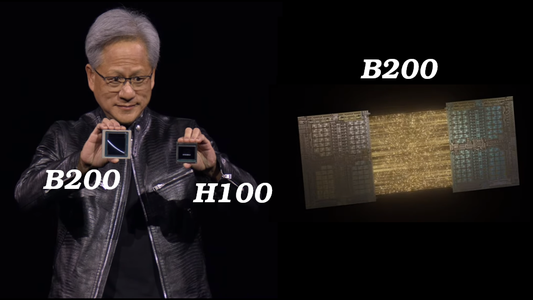

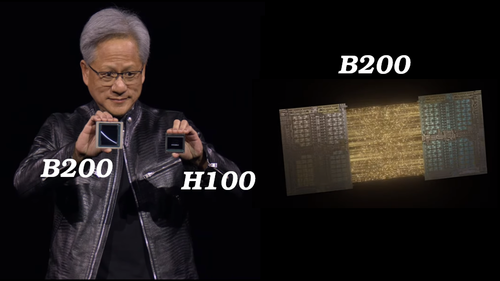

Nvidia, having recently surpassed a $2 trillion market cap on the strength of its dominance in AI chips, particularly the H100, continues to push boundaries. At GTC 2024, the company unveiled the Blackwell platform, a next-generation architecture optimized for artificial intelligence workloads. Named after mathematician David Blackwell, it succeeds the Hopper architecture, which debuted in 2022 and forms the foundation of the H100 GPU.

However, Nvidia emphasizes Blackwell as more than just a new GPU chip. “Blackwell’s not a chip, it’s the name of a platform,” stated CEO Jensen Huang. “Hopper is fantastic, but we need bigger GPUs.”

1- B200: the largest and most effective chip

Competitors did not succeed in dethroning the H100, so NVIDIA decided to do it itself. The B200 is not a single monolithic chip but a multi-die design that combines two dies into one GPU. The dies are linked by a high-speed NV-HBI connection of up to 10 terabytes per second, so they operate as a single GPU. This approach sidesteps the size limits of manufacturing a single die for AI workloads.

TSMC, the Taiwanese chipmaker, manufactures the B200 on a 4NP process customized for NVIDIA. The GPU contains a total of 208 billion transistors, 2.6 times as many as the H100. Because it consists of two dies, however, each die contains 104 billion transistors, a 30% increase over the H100’s 80 billion. The B200 uses 192 GB of HBM3E memory with a bandwidth of 8 TB/s. Together, these give it 2.5 times the H100’s performance at FP8 (20 petaflops) and 5 times when running at the lower-precision FP4 (40 petaflops).
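The transistor comparison is easy to check by hand. The snippet below is a minimal sketch that uses only the counts quoted in this article; the variable names are illustrative.

```python
# Transistor counts quoted in the article, in billions.
H100_TRANSISTORS_B = 80
B200_TRANSISTORS_B = 208
B200_DIES = 2  # the B200 combines two dies into one GPU

# Transistors on each B200 die.
per_die_b = B200_TRANSISTORS_B / B200_DIES

# Whole-package comparison: B200 versus H100.
package_ratio = B200_TRANSISTORS_B / H100_TRANSISTORS_B

# Per-die comparison: how much larger one B200 die is than an H100.
per_die_increase_pct = (per_die_b / H100_TRANSISTORS_B - 1) * 100

print(f"Transistors per B200 die: {per_die_b:.0f} billion")
print(f"B200 package vs H100: {package_ratio:.1f}x")
print(f"Per-die increase over H100: {per_die_increase_pct:.0f}%")
```

The per-die figure is the fairer measure of process and design gains, while the package figure reflects what the two-die approach adds on top.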

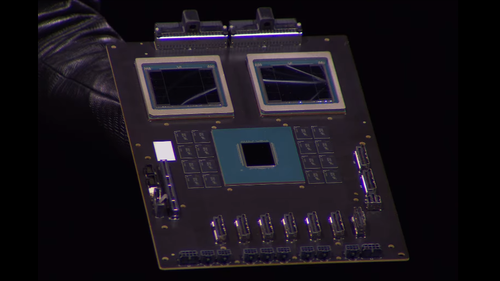

2- GB200 Superchip

Nvidia has unveiled a superchip design, the GB200. This design combines two B200 GPUs and a Grace CPU. The NVLink-C2C technology interconnects these GPUs with the CPU at a high bandwidth of 900 GB/s, while the system utilizes 384 GB of HBM3E memory.
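The 384 GB figure follows directly from the per-GPU memory quoted for the B200. The sketch below is plain arithmetic on the numbers in this article; the names are illustrative, and the aggregate-bandwidth line assumes the two GPUs' HBM bandwidths simply add, which the article does not state.

```python
# Figures quoted in the article.
B200_HBM_GB = 192        # HBM3E capacity per B200, in GB
B200_HBM_TBPS = 8        # HBM3E bandwidth per B200, in TB/s
GPUS_PER_GB200 = 2       # a GB200 pairs two B200 GPUs with one Grace CPU
NVLINK_C2C_GBPS = 900    # NVLink-C2C bandwidth, in GB/s

# Total HBM3E capacity on one GB200.
gb200_hbm_gb = GPUS_PER_GB200 * B200_HBM_GB

# Assumption: aggregate HBM bandwidth is the sum over both GPUs.
gb200_hbm_tbps = GPUS_PER_GB200 * B200_HBM_TBPS

# How much faster one GPU's local HBM is than the quoted CPU link.
hbm_vs_c2c = (B200_HBM_TBPS * 1000) / NVLINK_C2C_GBPS

print(f"GB200 HBM3E capacity: {gb200_hbm_gb} GB")
print(f"GB200 aggregate HBM bandwidth (assumed additive): {gb200_hbm_tbps} TB/s")
print(f"Local HBM vs NVLink-C2C bandwidth: {hbm_vs_c2c:.1f}x")
```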

The result is that the Grace Blackwell GB200, shown above, delivers 40 petaflops of compute and 864 GB of fast memory. The design performs especially well at inference for large language models such as those behind ChatGPT. According to Nvidia, compared with the H100, the GB200 offers up to a 30x speedup in inference and a 4x speedup in training, while cutting energy consumption by up to 25 times.

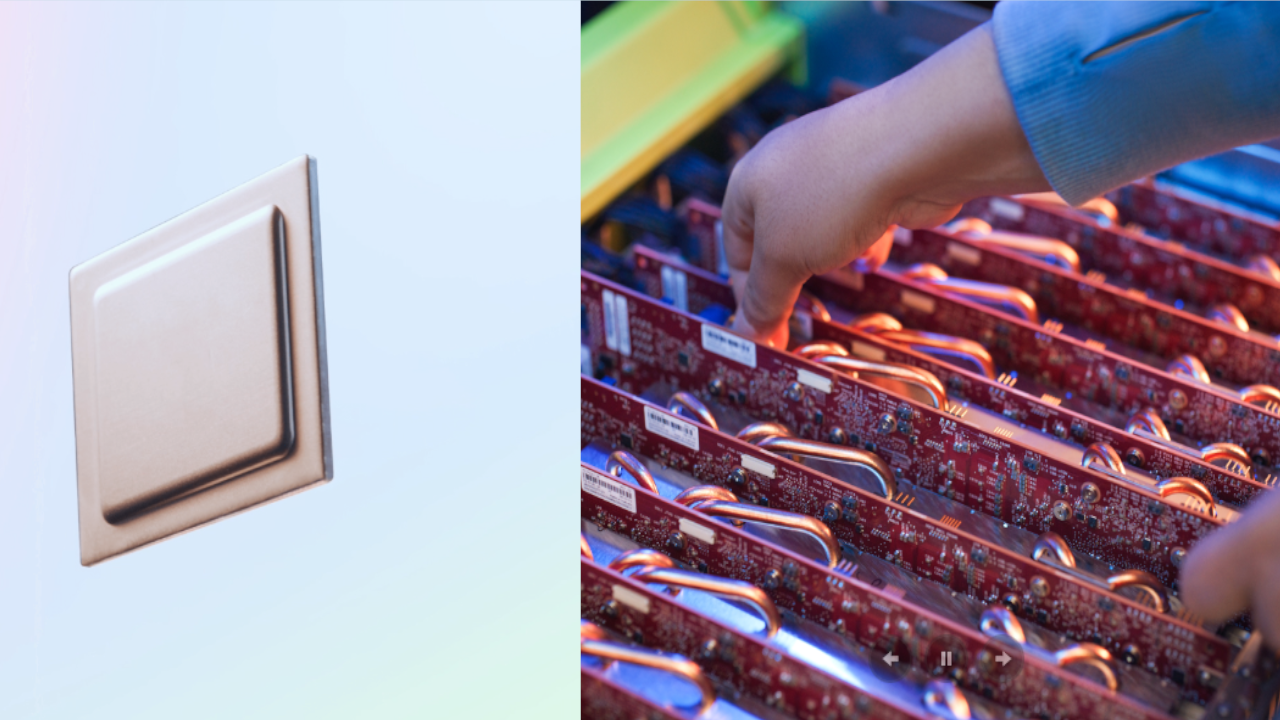

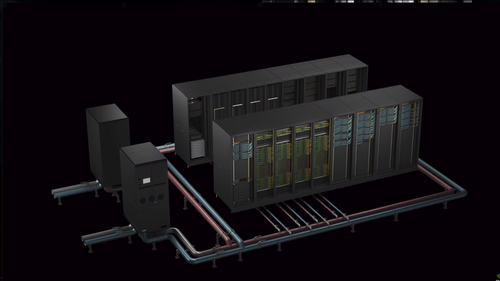

3- Supercomputer: DGX GB200 NVL72

To tackle large-scale AI tasks, Nvidia integrates its Grace Blackwell Superchips (GB200) into high-density systems like the DGX GB200 NVL72. This supercomputer packs 36 Grace CPUs and 72 Blackwell GPUs into a single liquid-cooled rack. Each tray within the rack contains either two GB200 superchips or two NVLink switch chips: 18 trays hold GB200 superchips and nine hold NVLink switches.
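The rack totals can be derived from the tray layout described above. This is a minimal sketch that assumes each GB200 carries one Grace CPU and two GPUs, as stated in the previous section; the constant names are illustrative.

```python
# Tray layout quoted in the article.
COMPUTE_TRAYS = 18         # trays holding GB200 superchips
SUPERCHIPS_PER_TRAY = 2

# Composition of one GB200 superchip (from the previous section).
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2

# Totals for one NVL72 rack.
superchips = COMPUTE_TRAYS * SUPERCHIPS_PER_TRAY
cpus = superchips * CPUS_PER_SUPERCHIP
gpus = superchips * GPUS_PER_SUPERCHIP

print(f"Superchips per rack: {superchips}")
print(f"Grace CPUs per rack: {cpus}")
print(f"GPUs per rack: {gpus}")
```

The GPU total is where the "72" in the NVL72 name comes from.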

The GB200 NVL72 system delivers 720 petaflops of training performance and 1.4 exaflops of inference performance. It houses nearly two miles of cabling across 5,000 individual cables. With 30 terabytes of memory, it functions as one giant GPU for training and running AI models with trillions of parameters. While rumors put GPT-4 at 1.76 trillion parameters, the GB200 NVL72 can support models with up to 27 trillion parameters.

4- DGX Superpod

Eight of these supercomputers join forces to create a monster machine known as the DGX Superpod. Like the one pictured, these Superpods are liquid-cooled and pack a staggering 576 GPUs, 288 CPUs, and a whopping 240 terabytes of memory. This powerhouse delivers a combined performance of 11.5 exaflops at FP4, making it ideal for tackling massive AI tasks.
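The Superpod figures are the single-rack figures multiplied by eight. The sketch below works through that scaling using only numbers from this article. It assumes the rack's 1.4 exaflops inference figure is the FP4 number, which the article implies but does not state.

```python
RACKS_PER_SUPERPOD = 8

# Per-rack figures quoted for the GB200 NVL72.
rack = {
    "gpus": 72,
    "cpus": 36,
    "memory_tb": 30,
    "inference_exaflops": 1.4,  # assumed to be the FP4 figure
}

# Scale every per-rack figure up to a full Superpod.
superpod = {name: RACKS_PER_SUPERPOD * value for name, value in rack.items()}

# The quoted 11.5 exaflops implies a slightly higher unrounded rack figure.
QUOTED_SUPERPOD_EXAFLOPS = 11.5
implied_rack_exaflops = QUOTED_SUPERPOD_EXAFLOPS / RACKS_PER_SUPERPOD

for name, value in superpod.items():
    print(f"{name}: {value:g}")
print(f"Implied per-rack exaflops: {implied_rack_exaflops:.2f}")
```

The GPU, CPU, and memory totals match exactly. The small gap in the performance figure is consistent with the per-rack number having been rounded down to 1.4.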

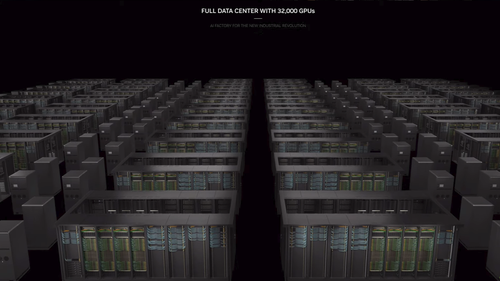

5- AI Factory (Data Center)

NVIDIA systems can scale to tens of thousands of GB200 superchips connected by high-speed networks. These massive systems are what Jensen Huang likes to call “AI factories,” not data centers.

Huang sees a parallel between these AI factories and the factories of the previous industrial revolution. Back then, water powered generators to produce electricity. In this new industrial revolution, data and electricity are the fuel for these “AI factories,” powering the creation of valuable tokens, which could be text, images, robot control commands, or anything else that drives intelligent actions.

At GTC 2024, NVIDIA unveiled a simulation of a colossal AI factory. This system would pack 32,000 Blackwell GPUs, delivering 645 exaflops of computational power and 13 petabytes of fast memory. More than a mere simulation, this was a digital twin, a virtual replica, of the very first data center NVIDIA built for Amazon’s cloud computing service (AWS).
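Dividing the quoted totals by the quoted unit count gives a rough per-unit figure to compare against the numbers earlier in the article. This is a back-of-the-envelope sketch, assuming decimal units (1 exaflop = 1,000 petaflops, 1 PB = 1,000,000 GB); the names are illustrative.

```python
# Totals quoted for the simulated AI factory.
UNITS = 32_000
TOTAL_EXAFLOPS = 645
TOTAL_MEMORY_PB = 13

# Compute per unit, converted from exaflops to petaflops.
petaflops_per_unit = TOTAL_EXAFLOPS * 1000 / UNITS

# Fast memory per unit, converted from petabytes to gigabytes.
memory_gb_per_unit = TOTAL_MEMORY_PB * 1_000_000 / UNITS

print(f"Compute per unit: {petaflops_per_unit:.1f} petaflops")
print(f"Fast memory per unit: {memory_gb_per_unit:.0f} GB")
```

The result, roughly 20 petaflops per unit, is close to the per-GPU figure implied by the NVL72 rack (1.4 exaflops across 72 GPUs).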

The excitement around NVIDIA’s Blackwell AI platform is undeniable. Over 40 companies are gearing up to leverage its capabilities, and all major cloud providers — Amazon, Google, Microsoft, and Oracle — are set to integrate GB200 processing units into their offerings.