Apple has published a research paper titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity.” The paper challenges the prevailing assumption that AI reasoning models, which generate detailed “thought processes” before giving an answer, are genuinely thinking.

These models (such as OpenAI o1/o3, DeepSeek-R1, Claude 3.7 Sonnet Thinking, and Gemini Thinking) score well on many reasoning benchmarks. However, their evaluations usually focus on the accuracy of the final answer to mathematical and programming problems and neglect the quality of the reasoning paths themselves. Apple argues that this “evaluation gap” obscures the possibility that these models are not building true logical reasoning rules but are simply relying on pattern matching derived from their training data.

Do Large Reasoning Models Think or Just Match Patterns?

To understand the reasoning behavior of these models more accurately, Apple designed controlled test environments using classic logic puzzles. Mathematical and programming benchmarks offer little control over experimental conditions, whereas puzzles allow problem complexity to be adjusted precisely and the intermediate thought process to be inspected. Apple used four main puzzles for this purpose:

- Tower of Hanoi: A classic mathematical puzzle in which a stack of disks must be moved from one peg to another, one disk at a time, without ever placing a larger disk on a smaller one.

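- River Crossing: A constraint-satisfaction problem in which a group must be ferried across a river in a boat of limited capacity without violating certain constraints. The paper's version uses pairs of actors and agents, a variant of the familiar fox, chicken, and grain riddle.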

- Blocks World: A spatial reasoning challenge where blocks must be stacked in a specific configuration.

- Checker Jumping: A puzzle in which two groups of checkers arranged in a row must swap positions by sliding into an empty space or jumping over a piece.
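Puzzles like these are convenient test beds because a single parameter sets the difficulty and every intermediate move can be checked mechanically. The following is a minimal sketch of that idea for Tower of Hanoi, not the paper's actual evaluation code: the function name `validate_hanoi`, the peg numbering (0, 1, 2), and the representation of a move as a `(source, destination)` pair are all assumptions made for illustration.

```python
def validate_hanoi(n_disks, moves):
    """Check a proposed Tower of Hanoi solution move by move.

    Hypothetical helper: pegs are numbered 0..2, all disks start on peg 0
    and must end on peg 2. Each move is a (source, destination) pair.
    """
    # Each peg is a stack; the last element is the top disk.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for src, dst in moves:
        # Reject moves that name an invalid peg or do not change pegs.
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            return False
        # Reject moves that take a disk from an empty peg.
        if not pegs[src]:
            return False
        disk = pegs[src][-1]
        # Reject placing a larger disk on top of a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False
        pegs[dst].append(pegs[src].pop())
    # The attempt succeeds only if the full stack ended up on peg 2.
    return pegs[2] == list(range(n_disks, 0, -1))


print(validate_hanoi(2, [(0, 1), (0, 2), (1, 2)]))  # True
print(validate_hanoi(2, [(0, 2), (0, 2)]))          # False
```

In this sketch, `n_disks` is the complexity knob: raising it makes the puzzle harder without changing the rules, and the checker can pinpoint the first illegal move in a model's output rather than only grading the final answer.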

The results showed that Large Reasoning Models (LRMs) performed well on simple and moderately complex versions of these puzzles, but their accuracy degraded sharply as complexity increased. Even more concerning, performance did not improve when the models were given an explicit solution algorithm. In the Tower of Hanoi puzzle, for instance, the algorithm was spelled out in the prompt as clear logical steps, yet the results did not improve.

This suggests that the models could not reliably execute a logical step-by-step procedure. Instead, they appeared to rely on pattern recognition and probabilistic inference, which led to fragmented and inconsistent reasoning that breaks down completely beyond a certain level of complexity.
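For context on what "an explicit solution algorithm" can look like, here is a minimal sketch of the standard recursive Tower of Hanoi solution. The exact wording of the algorithm given to the models is not reproduced in this article, so treat this as the textbook procedure rather than Apple's prompt; the function name `solve_hanoi` and the peg numbering are assumptions for illustration.

```python
def solve_hanoi(n, src=0, aux=1, dst=2):
    """Return the list of (source, destination) moves for n disks.

    Textbook recursion (assumed here, not taken from the paper's prompt):
    move n-1 disks out of the way, move the largest disk, then move
    the n-1 disks back on top of it.
    """
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, dst, aux)    # park n-1 disks on the spare peg
        + [(src, dst)]                       # move the largest disk
        + solve_hanoi(n - 1, aux, src, dst)  # bring the n-1 disks back on top
    )


print(solve_hanoi(2))  # [(0, 1), (0, 2), (1, 2)]
for n in (3, 5, 10):
    print(n, len(solve_hanoi(n)))  # 7, 31, 1023 moves respectively
```

The procedure itself is only a few lines, but the number of moves it produces is 2^n - 1 for n disks, so the output a model must write out without a single error roughly doubles with each added disk.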

Limitations of the Study and Future Prospects

Apple acknowledges that these puzzles don’t cover the full spectrum of human thinking processes. Nevertheless, they provide valuable and measurable indicators of these models’ weaknesses and their diminishing reasoning capabilities as problems become more complex. The findings suggest that LRMs might not possess generalizable reasoning ability beyond specific complexity thresholds.

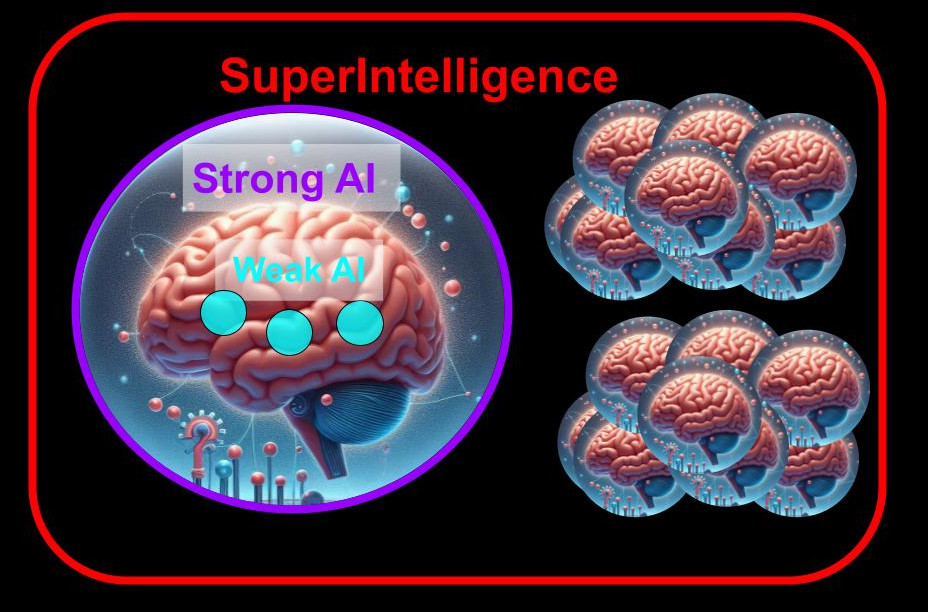

This study raises fundamental questions about the nature of “thinking” in AI. Are these models engaging in true reasoning, or are they merely sophisticated pattern-matching programs? Are current evaluation methods fair, or do they give a distorted picture of what AI can do? These questions open the door to a broader discussion about the future of AI and Artificial General Intelligence (AGI), and they underline the need for more research into these models’ true capabilities and for more comprehensive evaluation methods.