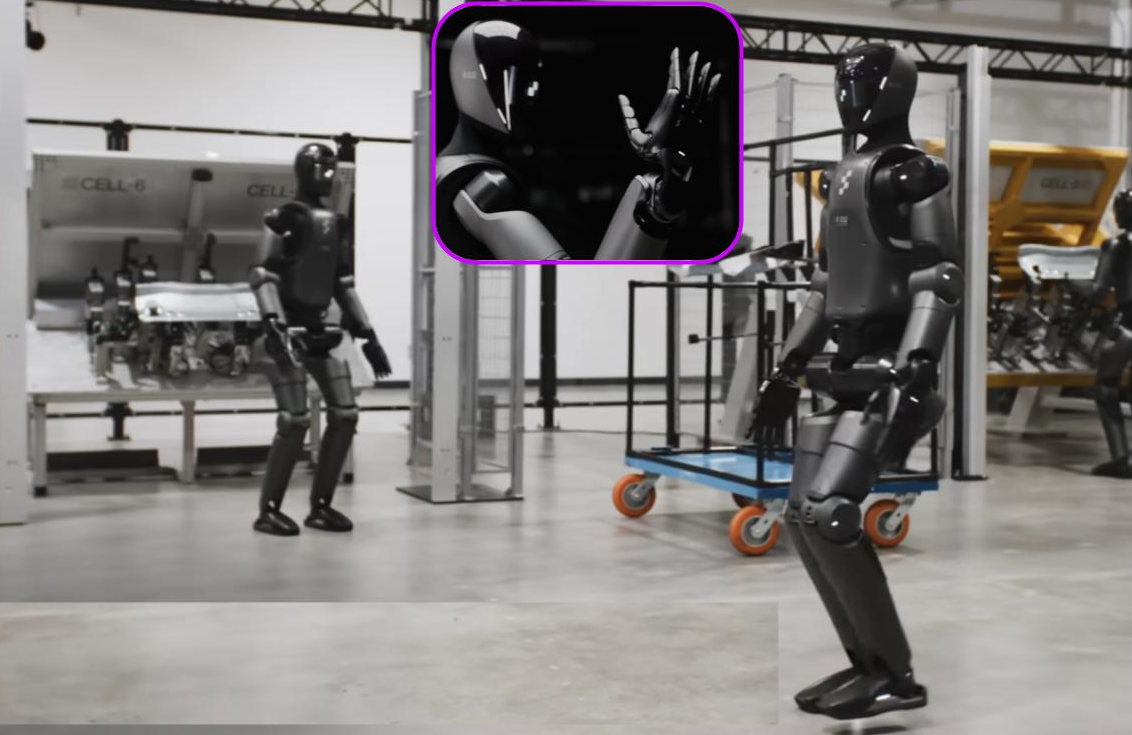

After OpenAI launched Sora, a text-to-video AI model that converts text into a high-quality video up to a minute long, a video of Meta Chief AI Scientist Yann LeCun went viral. In it, he said at Davos 2024 that he believes: “The future of AI is not generative.”

LeCun holds that an artificial intelligence model that simulates the world (a world model) will not be generative. Even if generative models like ChatGPT and Gemini have succeeded at understanding text, video is more complicated. This puts him at odds with the creators of Sora, a generative model, who concluded that: “Scaling video generation models is a promising path towards building general purpose simulators of the physical world.”

LeCun’s latest remarks may surprise those who have formed their understanding of AI based on advancements in generative models like ChatGPT, Midjourney, Dall-E, and others.

It is therefore worth understanding why, in LeCun’s view, these impressive generative models are not the future of AI or the path to a world model (artificial general intelligence (AGI), human-like intelligence, or advanced machine intelligence; call it whatever you want).

Why Does LeCun Think So?

LeCun clarified his position in a post on X, saying that generative models are trained to generate specific things, such as part of a word or part of an image (pixels). Restricting the training of such models to generation is therefore like restricting programming to print statements (the output).

In LeCun’s opinion, it is better to steer the training objective toward understanding the meaning of the image being predicted, not its pixels. This means mapping the image into a space other than pixel space, say a space of concepts or meanings (a latent space), so that the representations of two images expressing the same concept lie close together, even though their representations as grids of pixels may be very far apart.

In this way, we train the model to understand the meaning of the image rather than restricting it to generating a specific group of pixels. That should make the model more general and give it a better understanding of the world. We can then build models on top of it, like Sora, that draw images in pixel space.
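
To make the pixel space versus latent space distinction concrete, here is a minimal toy sketch in Python. It is not JEPA, nor anything LeCun or Meta published: the `toy_encoder` function and its three hand-written features are assumptions invented purely for illustration, standing in for a representation that a real model would have to learn. The sketch only shows that two images of the same shape in different positions can be far apart as pixels yet identical in a position-agnostic embedding.

```python
import numpy as np

CANVAS = 16  # side length of the toy images (arbitrary choice)

def make_square(top, left, size):
    """Return a CANVAS x CANVAS image containing one bright square."""
    img = np.zeros((CANVAS, CANVAS))
    img[top:top + size, left:left + size] = 1.0
    return img

def toy_encoder(img):
    """Hypothetical hand-written 'encoder' (illustration only).

    It keeps what the shape is (area, height, width) and discards where
    the shape sits. A real model would learn its embedding from data.
    """
    bright = img > 0.5
    area = bright.mean()                       # fraction of bright pixels
    height = bright.any(axis=1).sum() / CANVAS # rows touched by the shape
    width = bright.any(axis=0).sum() / CANVAS  # columns touched by the shape
    return np.array([area, height, width])

def distance(x, y):
    """Euclidean distance between two arrays of the same shape."""
    return float(np.linalg.norm(x - y))

# Same "concept" (a 4x4 square) in two different places.
a = make_square(top=1, left=1, size=4)
b = make_square(top=10, left=10, size=4)
# A different "concept" (a 5x5 square) that overlaps image a.
c = make_square(top=1, left=1, size=5)

pixel_ab, pixel_ac = distance(a, b), distance(a, c)
latent_ab = distance(toy_encoder(a), toy_encoder(b))
latent_ac = distance(toy_encoder(a), toy_encoder(c))

print("pixel space : d(a, b) =", pixel_ab, " d(a, c) =", pixel_ac)
print("latent space: d(a, b) =", latent_ab, " d(a, c) =", latent_ac)
```

In pixel space, the shifted copy `b` ends up farther from `a` than the differently sized square `c` does, simply because `c` overlaps `a`. In the toy embedding the ordering flips: `a` and `b` coincide, while `c` sits apart. A training objective defined in such a space rewards getting the concept right rather than matching each pixel.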

Is LeCun Wrong?

LeCun’s vision is to create machines that can learn internal models of how the world works. To achieve this vision, he proposed the Joint Embedding Predictive Architecture (JEPA). Meta’s AI team recently published a research paper, V-JEPA, that applies this architecture to video. V-JEPA doesn’t generate beautiful videos, but it shows experimentally that LeCun’s architecture is able to understand the world better than previously studied generative methods.

Artificial intelligence is an experimental science, not just a branch of philosophy. Judging this architecture on theoretical grounds is only a preliminary step and carries little weight. The real test is experimental research that shows us which architecture is best for building a world model.

None of this means that the Sora model is not amazing at video generation. But the question is bigger than generating nice videos: it is whether non-generative models like V-JEPA can make better world simulators. Answering that will take serious research and experiments.