Meta has unveiled a new AI chip designed for its own AI workloads. MTIA v2 is the next generation of the Meta Training and Inference Accelerator (MTIA), which was unveiled last year. It focuses on accelerating deep learning workloads for ranking and recommendation models, with the aim of improving user experiences across Meta’s applications.

Compared to MTIA v1, MTIA v2 delivers roughly 3x the performance, along with improved energy efficiency. The gain comes from several changes: more on-chip memory (256MB vs. 128MB), a larger design with more processing elements, and a higher clock speed (1.35 GHz, up from 800 MHz). The trade-off is higher power consumption (90W vs. 25W).
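
As a rough sanity check, the ratios behind these figures can be worked out directly. The sketch below uses only the clock, power, and speedup numbers quoted in this article. The variable names are illustrative, and `speedup_per_power` is a crude back-of-the-envelope quantity assumed here for comparison, not a metric Meta reports.

```python
# Figures as quoted in this article (v1 -> v2); not independently measured.
CLOCK_GHZ = {"v1": 0.8, "v2": 1.35}
POWER_W = {"v1": 25.0, "v2": 90.0}
CLAIMED_SPEEDUP = 3.0  # the "3x" performance figure quoted above


def ratio(spec):
    """Return the v2 / v1 ratio for one spec."""
    return spec["v2"] / spec["v1"]


clock_ratio = ratio(CLOCK_GHZ)
power_ratio = ratio(POWER_W)

# Hypothetical comparison: quoted speedup divided by the growth in power.
# A value below 1 means power grew faster than the quoted speedup.
speedup_per_power = CLAIMED_SPEEDUP / power_ratio

print(f"clock ratio: {clock_ratio:.2f}x")
print(f"power ratio: {power_ratio:.2f}x")
print(f"speedup / power ratio: {speedup_per_power:.2f}")
```

On these numbers the clock increase alone (about 1.7x) does not account for the quoted 3x, which is consistent with the other architectural changes contributing. The power figure also grows faster than the quoted speedup, so the efficiency claim cannot be read off these chip-level numbers alone; it depends on how performance and power were actually measured.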

Meta’s MTIA v2 chip marks a significant step toward reducing the company’s dependence on Nvidia GPUs in its AI infrastructure. It is part of a broader plan to develop custom, domain-specific silicon across its AI stack. By controlling the full hardware and software stack, Meta expects to achieve greater efficiency than it could by relying on commercially available GPUs.

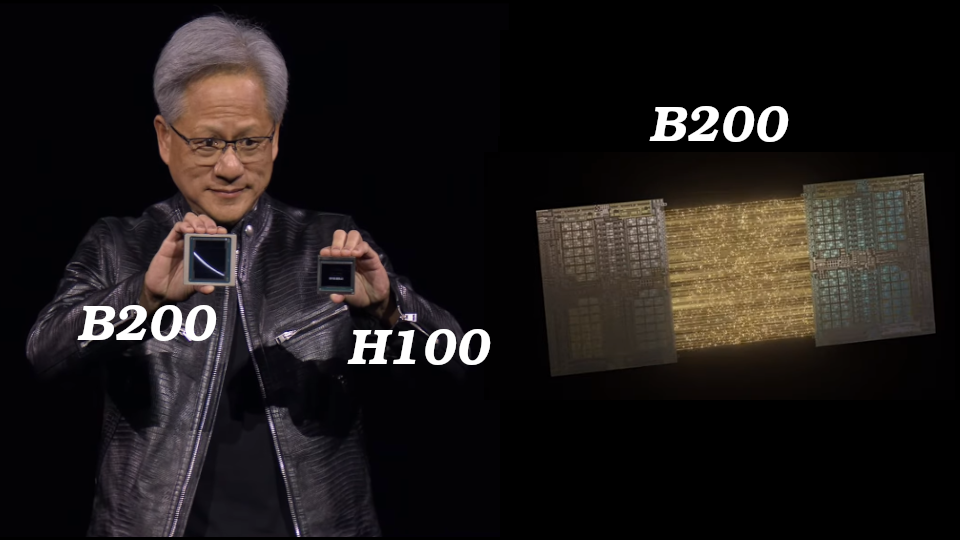

However, Meta is not trying to cut itself off from external GPUs entirely: MTIA chips work alongside Nvidia GPUs in its existing infrastructure. In January, Zuckerberg announced a major investment to acquire 350,000 Nvidia H100 GPUs by the end of 2024.

Nvidia has since announced the Blackwell B200, the successor to the H100, which it presents as its most powerful GPU for AI to date.

WHAT IS NEXT

Meta said it will continue working to improve its own AI chips while also investing in memory bandwidth, networking, and the surrounding hardware systems.