Elon Musk has announced that his AI startup, xAI, has finished building what he describes as the world’s largest supercomputer for artificial intelligence. The system comprises 100,000 Nvidia H100 GPUs.

Musk said his team accomplished this feat in about four months (122 days), crediting Nvidia, which supplied the GPUs, and the other vendors that helped build the facility. He has named it “Colossus,” a fitting moniker given its size. He added that xAI plans to double its capacity to 200,000 GPUs in the coming months, a total that will include 50,000 Nvidia H200 units.

Considering that each H100 GPU is priced at a minimum of $30,000, the cost of this facility in chips alone is approximately $3 billion, excluding other necessary equipment. Once the cluster doubles in size, and assuming the additional GPUs cost about the same per unit, the total would rise to around $6 billion, roughly the amount Musk raised for the company a few months ago.
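The estimate above is simple multiplication, and a short Python sketch makes the assumptions explicit. The function name `chip_cost_usd` is made up for illustration, and the sketch assumes every GPU, including the H200 units, costs the same $30,000 minimum quoted for the H100. Real prices are likely to differ, so treat the result as a rough floor rather than an actual bill.

```python
# Rough chip-cost estimate. Assumption: every GPU costs the $30,000
# minimum quoted above for an H100 (H200 units are priced the same here).
H100_UNIT_PRICE_USD = 30_000


def chip_cost_usd(gpu_count: int, unit_price_usd: int = H100_UNIT_PRICE_USD) -> int:
    """Return the cost of the GPUs alone, excluding all other equipment."""
    return gpu_count * unit_price_usd


current = chip_cost_usd(100_000)  # Colossus as announced
doubled = chip_cost_usd(200_000)  # after the planned expansion

print(f"Current: ${current / 1e9:.1f} billion")  # Current: $3.0 billion
print(f"Doubled: ${doubled / 1e9:.1f} billion")  # Doubled: $6.0 billion
```

Changing `unit_price_usd` shows how sensitive the total is to the per-chip price: every extra $1,000 per GPU adds $200 million at 200,000 units.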

GPUs like the H100 play a crucial role in the success of large language models such as Gemini, ChatGPT, and Grok. A large cluster of them shortens training time and lets researchers test numerous training options across multiple models, then select the one that performs best. This kind of experimentation is a critical aspect of deep learning, which underpins current AI models: the more approaches engineers can try, the more likely they are to produce a superior model.

Musk seems to recognize the significance of owning such a powerful machine for building advanced AI models. This AI supercomputer could allow xAI to outpace other companies in how quickly models are produced and then improved when errors are discovered. For instance, when Google’s AI system, Imagen, struggled to generate human faces, it took several months to resolve the issue. While finding and fixing the problem might not have taken long, the infrastructure dedicated to training this model appeared to be modest. No entrepreneur would want to be seen as lagging behind the way Google was.

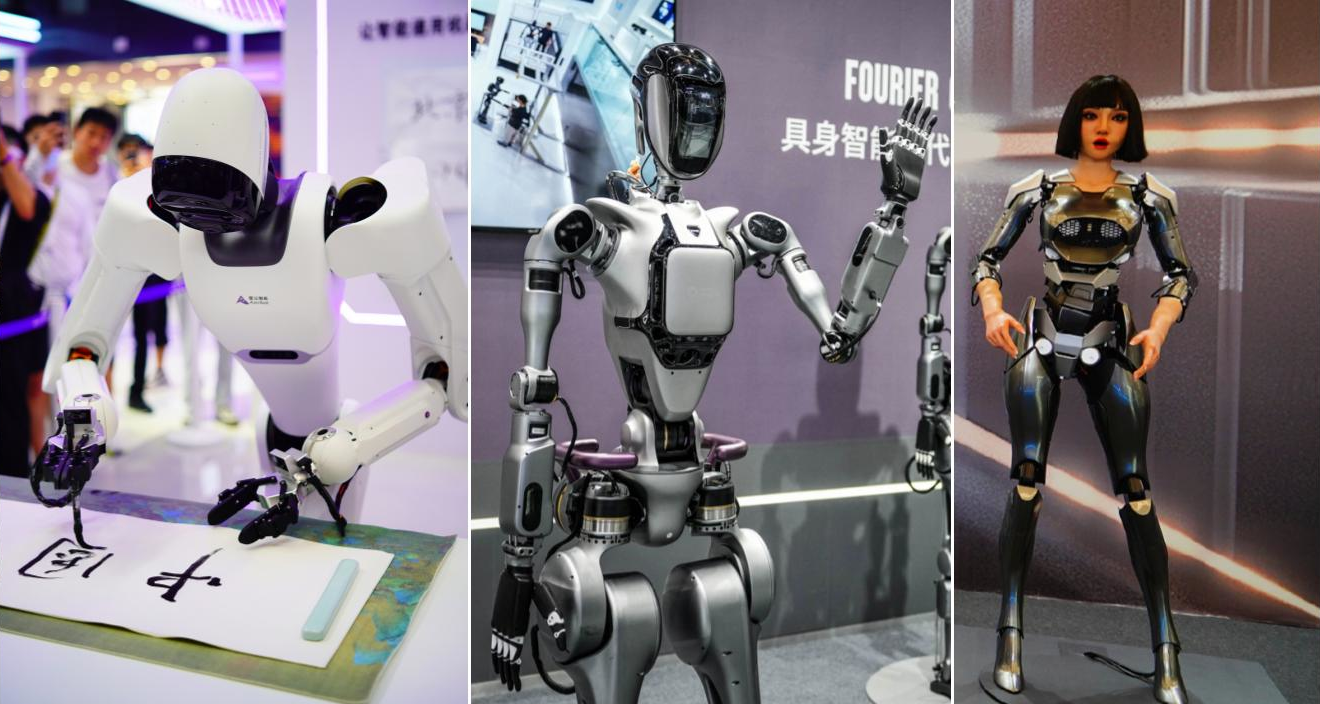

It remains uncertain whether “Colossus” is indeed the largest AI factory or supercomputer for AI training, though it is likely to be the case. In April, Mark Zuckerberg announced that Meta was using 35,000 H100 units and aimed to increase this number to 85,000 by the end of the year. Given the vast number of models Meta develops and uses daily, a single xAI cluster that exceeds those figures underscores the magnitude of Musk’s ambition to dominate the AI field and potentially build artificial general intelligence.