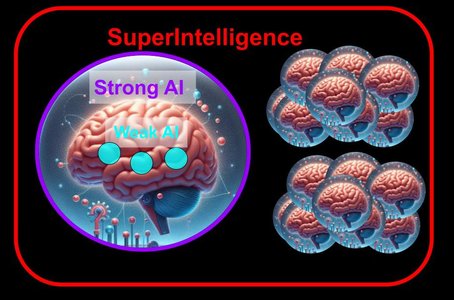

Artificial intelligence (AI) is a rapidly evolving field, and the terminology can be confusing even for those following it closely. This article clarifies the key differences between three major categories of AI: weak (narrow), strong (general), and superintelligence.

1. Weak AI (Narrow AI)

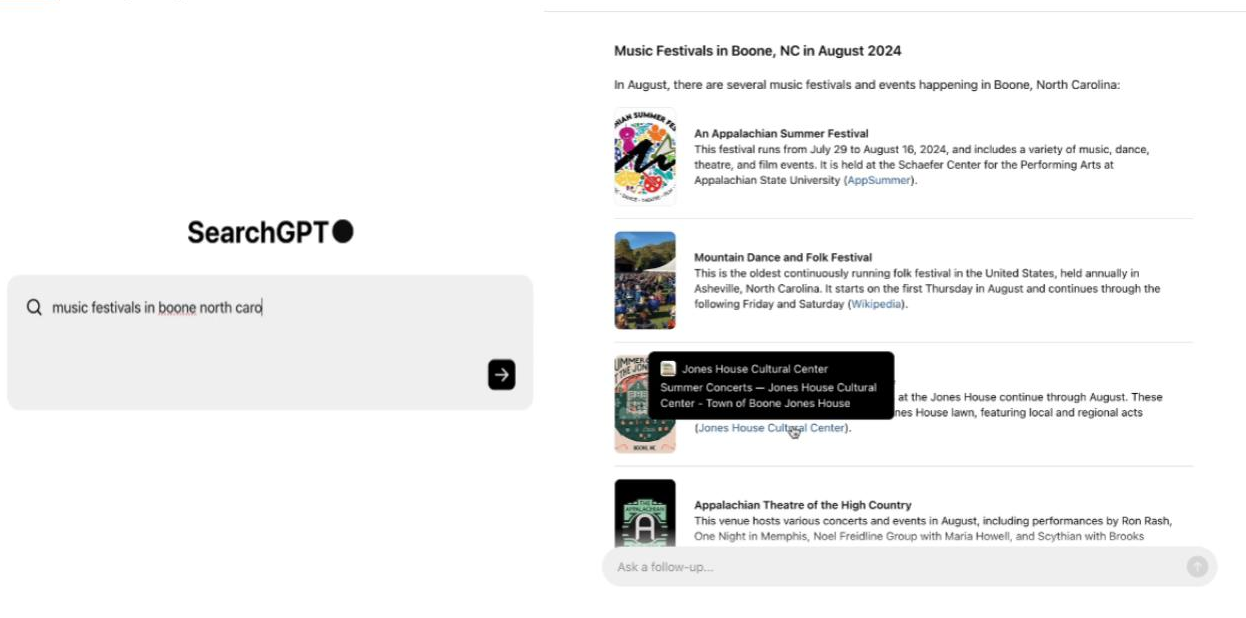

Weak artificial intelligence, also known as narrow AI, is designed to perform a specific task or a narrow set of related tasks. It can learn and improve within its area of specialization, but it cannot apply that knowledge to entirely new situations it hasn’t been trained for.

Narrow AI is common today. Chatbots, self-driving car software, and chess-playing programs are all examples.

2. Strong AI (General AI or AGI)

Strong artificial intelligence (also known as Artificial General Intelligence, or AGI) is a long-term goal of AI research. Unlike weak AI, which excels at specific tasks, strong AI aims to achieve human-level intelligence across a wide range of domains. It would possess a broad set of cognitive abilities, enabling it to think, learn, solve problems, and adapt to new situations much as a human does, rather than being limited to the specific tasks it has been trained on.

Strong AI (AGI) is still a theoretical concept, and research into achieving it is ongoing. Researchers tend to use the term AGI, while philosophers tend to favor “strong AI.” The term “strong AI” was coined by philosopher John Searle in 1980 to distinguish the claim that an appropriately programmed computer genuinely has a mind from the weaker claim that computers are merely useful tools for simulating thought.

Writer and futurist Ray Kurzweil has predicted that strong AI will arrive by 2029. Nvidia CEO Jensen Huang has suggested it could be reached within five years, while Elon Musk has predicted it could arrive in 2025 or 2026. These timelines are highly speculative and remain a topic of debate, not least because there is no agreed-upon definition of AGI.

3. Artificial Superintelligence (ASI)

ASI is a hypothetical level of intelligence that surpasses even strong AI (AGI), exceeding human intelligence across a vast array of domains. Imagine a machine that can not only learn and reason like a human but also do so at unmatched speed and scale, drawing on vast amounts of information to inform its decisions.

While ASI remains theoretical, some theorists suggest it could continuously improve itself by learning from its own experience and mistakes. This raises significant ethical concerns: some fear that ASI could reach a point where it becomes a threat to humanity.